Research Article - Journal of Psychology and Cognition (2017) Volume 2, Issue 1

The effect of presentation time and working memory load on emotion recognition.

Tsouli A, Pateraki L, Spentza I, Nega C*

The American College of Greece, Greece

- *Corresponding Author:

- Chrysanthi Nega

The American College of Greece

Greece

Tel: +302106009800; Ext 1612

E-mail: cnega@acg.edu

Accepted date: February 15, 2017

Citation: Tsouli A, Pateraki L, Spentza I, et al. The effect of presentation time and working memory load on emotion recognition. J Psychol Cognition. 2017;2(1):61-66.

DOI: 10.35841/psychology-cognition.2.1.61-66

Abstract

Current research on the role of cognitive resources in emotional face recognition provides inconclusive support for the automaticity model. The purpose of the present study was to examine the effect of working memory load and attentional control on emotion recognition. Participants (N=60) were shown photographs of fearful, angry, happy and neutral faces for 200 ms, 700 ms or 1400 ms while engaging in a concurrent working memory load task. A restricted response time was employed. Data analysis revealed that presentation time did not affect reaction time across emotions. Furthermore, graded load did not impair reaction times. Reaction times to fear and anger were significantly longer than to the other emotions across load conditions. These findings are broadly congruent with automaticity theory and the negativity bias, suggesting that efficient emotion recognition can occur even when working memory resources are taxed, whereas longer reaction times for negative stimuli indicate the partial involvement of higher cognitive processes that are necessary for evaluating potential threats. It is suggested that although negative emotional faces can be processed automatically, this processing also requires sufficient attention in order to be carried out.

Keywords

Automatic attention, Emotion recognition, Cognitive load, Negativity bias.

Introduction

Research on emotional expressions has a long tradition, with Darwin being the first to highlight the evolutionary importance of primary emotions (fear, anger, disgust, sadness, happiness and surprise), described as “species specific” [1]. These emotions are assumed to have evolved to facilitate the adaptation of organisms to recurrent environmental stimuli and the conveyance of crucial social information [2-6]. More specifically, facial emotion recognition serves an essential communicative function, manifested in the ability to interpret the mental states of others. This interpretation involves decoding the meaning of emotional expressions, weighing their importance to the self and others, and associating them with subsequent verbal discourse and action [7,8]. Processing such manifold information simultaneously necessitates fast and efficient cognitive operations, which are made possible by a system of interconnected processing levels. This system, which supports the manipulation, evaluation and decision-making steps that make up emotional cognition, is known as working memory (WM).

A paradigm widely used to isolate the specific contributions of WM to interacting cognitive operations involves either two concurrent tasks that must be performed simultaneously or a secondary task that competes for the processing resources of the primary one. Given the limited capacity of the attention system [9,10], the dual-task methodology examines the effect of attentional load on the primary task, where interference from the secondary task typically decreases performance [7]. This decrement in performance is strongly moderated by the similarity and difficulty of the two competing tasks [11,12].
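The interference described above is often quantified as a proportional dual-task cost. The sketch below is illustrative only and is not part of the present study's analysis: the function name `dual_task_cost` and the example values are hypothetical, and the sign convention (positive values mean worse performance under dual-task conditions) is an assumption.

```python
def dual_task_cost(single: float, dual: float, higher_is_better: bool = True) -> float:
    """Proportional dual-task cost; positive values mean worse performance under dual-task.

    Accuracy-like measures (higher is better): (single - dual) / single
    Reaction times (lower is better):          (dual - single) / single
    """
    if single == 0:
        raise ValueError("single-task baseline must be non-zero")
    diff = (single - dual) if higher_is_better else (dual - single)
    return diff / single


# Hypothetical values for illustration only (not data from this study).
acc_cost = dual_task_cost(single=0.95, dual=0.85)                          # proportion correct
rt_cost = dual_task_cost(single=700.0, dual=770.0, higher_is_better=False)  # mean RT in ms
print(f"accuracy cost: {acc_cost:.1%}, RT cost: {rt_cost:.1%}")
```

Expressing the cost as a proportion of single-task performance makes costs comparable across measures with different units, such as accuracy and milliseconds.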

There is evidence that emotion labelling is impaired when performed concurrently with a WM task (usually the n-back task), with negative or threat-relevant emotions adversely affecting accuracy and reaction time, suggesting that emotion recognition is tightly intertwined with higher-order cognitive processes [13-16]. Conversely, other studies adopting a similar rationale have found that cognitive load does not significantly interfere with affect recognition, supporting the view that emotion processing is automatic and independent of working memory resources [17,18]. However, these studies did not control stimulus presentation duration or response time.

Neuroimaging data suggest that brain activation during emotion recognition tasks is modulated by the degree of attention paid to the stimulus: amygdala activation is related to implicit emotion recognition, whereas prefrontal and frontoparietal network activation occurs during explicit emotional processing and under the high cognitive demand caused by concurrent working memory load [14,19-25]. Hence, given that spontaneous facial expressions are particularly brief during social interactions [26,27], combining rapid stimulus presentation, so as to achieve relatively “preattentive” processing, with graded memory load appears to be a promising way to test whether emotional perception is indeed autonomous and efficient regardless of depleted memory resources and limited processing time.

In line with this evidence, the aim of the present study was to elucidate the perceptual and cognitive processes underlying the intricate association between working memory and facial affect recognition by manipulating attentional resources and inducing graded cognitive load. The dual-task paradigm has been commonly adopted in studies investigating bottom-up cognitive processing: if performance on one of the two tasks remains relatively unaffected by the competing demands, that task is assumed to be automatic because it resists interference [7,12,28].

To our knowledge, no previous study has tested whether differential cognitive load, induced by manipulating the word length effect [29,30], can interfere with the efficiency of facial affect recognition. Word length can be systematically manipulated (short vs. long words), producing differential momentary depletion of the cognitive resources required for the primary task, which is reflected in decreased response accuracy and increased reaction time [31-34]. The present study imposed graded working memory load during emotion recognition by means of a secondary task: participants were asked to repeat a word aloud and keep it in mind while recognizing the emotional expression of a target face. It was hypothesized that if facial affect recognition is indeed automatic and independent of conscious control, performance on the emotion recognition task would not be adversely affected either by the concurrent working memory task or by the duration of stimulus presentation [18,35]. Conversely, if emotion recognition depends on working memory resources, cognitive load would interfere with performance and more errors would be produced across presentation durations [15,36].

Method

Participants

Sixty undergraduate volunteers (30 males and 30 females; aged between 18 and 26; Mage=21.0, SD=2.07) attending the American College of Greece were randomly selected.

Materials

The experiment was designed using E-Prime (Psychology Software Tools, Inc.) and run on a Dell OptiPlex 990 desktop computer. Four black-and-white photographs, three depicting fear, anger and happiness and one depicting a neutral expression, were taken from the Facial Action Coding System Manual [37]. The word pool consisted of 48 English words, either one or three syllables long, selected from the MRC Psycholinguistic Database [38] after being checked and equated for neighborhood effects, for phonological, semantic and orthographic similarity, and for valence and arousal [39,40]. These were divided equally between the low and high cognitive load conditions, and 12 additional words were used in the practice trials before the experiment.

Procedure

A mixed factorial design was employed, with participants randomly assigned to one of three presentation time conditions (200 ms, 700 ms or 1400 ms). The within-participants variables were emotion (fearful, angry, happy, neutral) and graded cognitive load, operationally defined as no load (no word presented), low load (a one-syllable word) and high load (a three-syllable word). The measures were correct identification of the emotion label and reaction time in milliseconds (ms), measured from the moment the emotion label appeared on the screen until the participant responded. Accuracy on the concurrent WM task was also examined, to ensure that, in line with the dual-task paradigm, performance on the WM load task was not undermined by the concurrent emotion recognition.

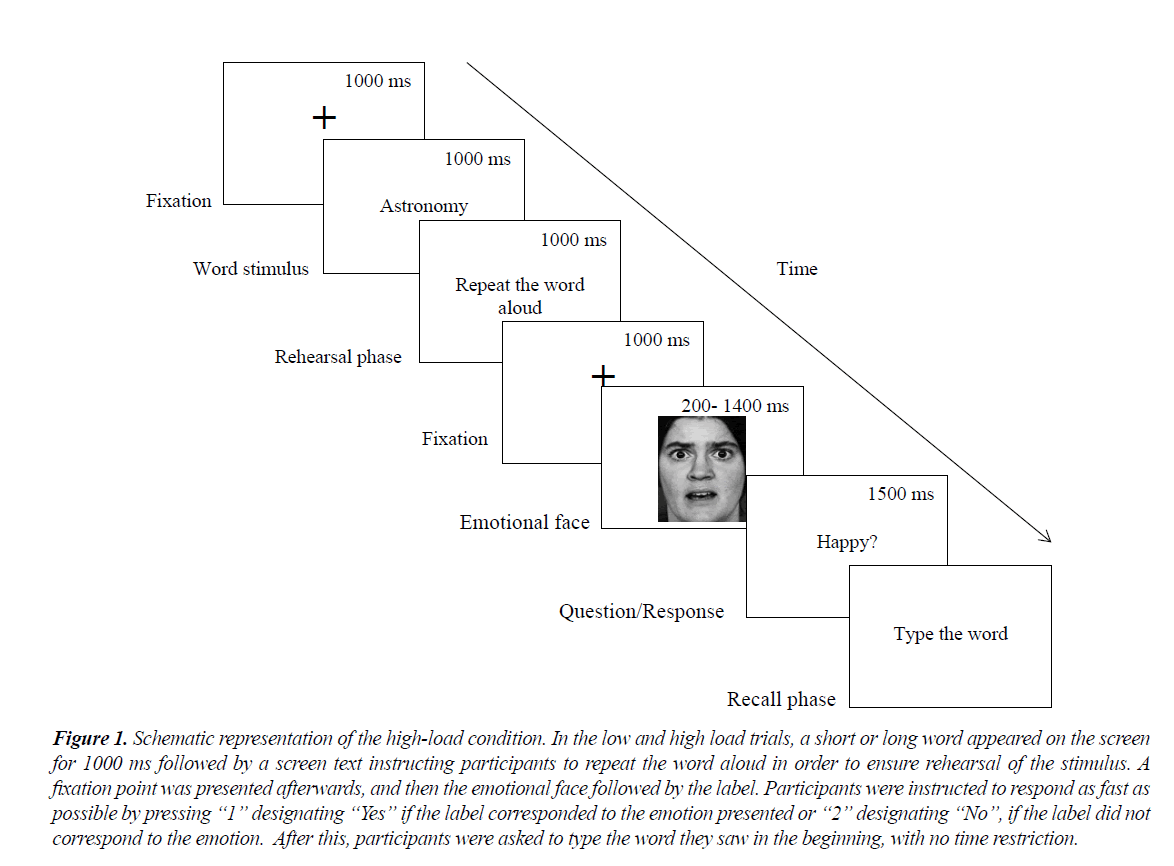

In all conditions, participants sat approximately 27 in. from a 24-in. computer screen. Each experimental session comprised 72 trials, 24 per load condition, in which each of the four emotions was presented six times, followed by three correct and three incorrect labels. In the no-load trials, a blank screen was presented for 1 s, followed by a fixation point lasting 1 s and a photograph of an emotional face (fearful, angry, happy, neutral) displayed for 200 ms, 700 ms or 1400 ms, depending on the presentation time condition to which participants were assigned. The 200 ms presentation time was adapted from the study of Calvo and Lundqvist [26], who used durations ranging from 25 ms to 500 ms to present emotional stimuli, while the 700 ms presentation time was similar to the durations used by Getz et al. [27], who displayed emotional stimuli for 500 ms, 750 ms or 1000 ms. Given the scarcity of relevant literature on the matter, and taking into account that facial expressions tend to last less than 1 s in real life, we used 1400 ms as the control condition to allow a more informative comparison with the other two presentation conditions. The emotion label (e.g., “happy”) then appeared, and participants were instructed to respond as fast as possible by pressing “1” (“Yes”) if the label corresponded to the emotion presented or “2” (“No”) if it did not. The “1” key was clearly marked with a green sticker and the “2” key with a red sticker. The experimental tasks were counterbalanced with respect to the emotional face, the graded cognitive load and the correct/incorrect label trials. Response time was restricted to 1500 ms, and neither recognition accuracy nor response time was recorded for trials in which participants failed to respond within the time limit. The 1500 ms limit was chosen because a pilot study indicated that it encouraged participants to respond as quickly as possible without producing feelings of frustration. A schematic representation of a sample trial is shown in Figure 1. Word stimuli, emotional faces and labels were presented in random order in each experimental session. Twelve practice trials were introduced before the actual experiment to familiarize participants with the procedure.
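As a minimal sketch of the session structure described above (3 load conditions × 4 emotions × 6 trials, half with a matching label), the trial list could be generated and shuffled as follows. This is not the study's E-Prime script: the names `build_session` and `no_load_timeline` are hypothetical, the way mismatching labels were chosen is not stated in the text and is assumed here to be random among the other emotions, and the word stimuli of the load trials are omitted.

```python
import random
from dataclasses import dataclass

EMOTIONS = ["fearful", "angry", "happy", "neutral"]
LOADS = ["no", "low", "high"]   # no word, one-syllable word, three-syllable word
REPS_PER_CELL = 6               # per emotion and load: 3 matching + 3 mismatching labels
RESPONSE_WINDOW_MS = 1500       # responses after this are not recorded


@dataclass
class Trial:
    load: str
    emotion: str
    label: str
    label_matches: bool  # True -> correct key is "1" (Yes); False -> "2" (No)


def build_session(rng: random.Random) -> list[Trial]:
    """Build and shuffle the 3 x 4 x 6 = 72-trial list for one participant."""
    trials = []
    for load in LOADS:
        for emotion in EMOTIONS:
            for i in range(REPS_PER_CELL):
                matches = i < REPS_PER_CELL // 2
                # Assumption: a mismatching label is drawn at random from the other emotions.
                label = emotion if matches else rng.choice([e for e in EMOTIONS if e != emotion])
                trials.append(Trial(load, emotion, label, matches))
    rng.shuffle(trials)  # random presentation order within the session
    return trials


def no_load_timeline(face_ms: int) -> list[tuple[str, int]]:
    """Event durations (ms) for a no-load trial; face_ms is 200, 700 or 1400.

    Assumption: the label stays on screen for the whole response window.
    """
    return [("blank", 1000), ("fixation", 1000), ("face", face_ms),
            ("label + response window", RESPONSE_WINDOW_MS)]


session = build_session(random.Random(2017))
print(len(session), no_load_timeline(700))
```

Seeding the random generator per participant keeps each session reproducible while still randomizing trial order.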

Figure 1. Schematic representation of the high-load condition. In the low- and high-load trials, a short or long word appeared on the screen for 1000 ms, followed by a text screen instructing participants to repeat the word aloud to ensure rehearsal of the stimulus. A fixation point was then presented, followed by the emotional face and then the label. Participants were instructed to respond as fast as possible by pressing “1” (“Yes”) if the label corresponded to the emotion presented or “2” (“No”) if it did not. Finally, participants were asked to type the word they had seen at the beginning of the trial, with no time restriction.

Results

Data analyses were conducted on participants’ RTs (ms) and recognition accuracy (RA; number of correct responses out of the six trials of each emotion). RTs were obtained from correct responses only and were analyzed over the six trials of each emotion. Accuracy was likewise analyzed over the six trials of each emotion.
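The scoring rules (RT from correct responses only; no data recorded for trials without a response within 1500 ms) can be sketched as below. The record layout and the `score` function are hypothetical, and accuracy is counted as the number of correct responses per cell, an assumption based on the reported accuracy means lying between 5 and 6.

```python
from collections import defaultdict
from statistics import mean

RESPONSE_WINDOW_MS = 1500


def score(records):
    """Aggregate raw trials into (mean correct RT, number correct) per cell.

    Each record is a hypothetical tuple (participant, load, emotion, correct, rt_ms),
    where rt_ms is None when no key was pressed.
    """
    correct_rts = defaultdict(list)
    cells = set()
    for pid, load, emotion, correct, rt_ms in records:
        cell = (pid, load, emotion)
        cells.add(cell)
        if rt_ms is None or rt_ms > RESPONSE_WINDOW_MS:
            continue  # timed-out trials contribute neither RT nor accuracy
        if correct:
            correct_rts[cell].append(rt_ms)  # RT is taken from correct responses only
    return {
        cell: (mean(correct_rts[cell]) if correct_rts[cell] else None, len(correct_rts[cell]))
        for cell in cells
    }


trials = [
    (1, "low", "happy", True, 610),
    (1, "low", "happy", True, 650),
    (1, "low", "happy", False, 720),   # error: excluded from RT, not counted as correct
    (1, "low", "happy", False, None),  # timeout: nothing recorded
]
print(score(trials))
```

Cells in which every response was an error or a timeout get a mean RT of `None`, so they can be handled explicitly rather than silently averaged.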

Two 3 × 3 × 4 mixed ANOVAs were conducted to evaluate the effects of presentation time (200 vs. 700 vs. 1400 ms), cognitive load (no vs. low vs. high) and emotion (fear vs. anger vs. happiness vs. neutral) on RT and accuracy, with presentation time as the between-participants variable.
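The degrees of freedom reported for these analyses follow from the design: with N = 60 participants in a = 3 presentation-time groups, the between-participants error term has N - a = 57 df, and each within-participants effect with df_effect = ∏(k - 1) is tested against df_effect × 57 error df. The sketch below assumes sphericity (no Greenhouse-Geisser or similar correction, which would generally give non-integer df); the function name is hypothetical.

```python
from itertools import combinations
from math import prod


def mixed_anova_dfs(n_subjects: int, n_groups: int,
                    within_levels: dict[str, int]) -> dict[str, tuple[int, int]]:
    """Sphericity-assumed (uncorrected) df for one between factor plus within factors."""
    error_between = n_subjects - n_groups  # N - a
    dfs = {"group": (n_groups - 1, error_between)}
    names = list(within_levels)
    for r in range(1, len(names) + 1):
        for combo in combinations(names, r):
            df_effect = prod(within_levels[name] - 1 for name in combo)
            df_error = df_effect * error_between  # same error df for the group interaction
            label = " x ".join(combo)
            dfs[label] = (df_effect, df_error)
            dfs["group x " + label] = ((n_groups - 1) * df_effect, df_error)
    return dfs


# "group" is the presentation-time factor.
dfs = mixed_anova_dfs(n_subjects=60, n_groups=3, within_levels={"emotion": 4, "load": 3})
for effect, (df1, df2) in dfs.items():
    print(f"{effect}: F({df1}, {df2})")
```

The values for group, emotion, load and emotion × load match the F(2, 57), F(3, 171), F(2, 114) and F(6, 342) tests reported for this design.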

Reaction time

The analysis revealed a statistically significant effect of emotion, F(3, 171)=94.08, p<0.001, ηp²=0.62, a statistically significant effect of load, F(2, 114)=28.77, p<0.001, ηp²=0.33, and a non-significant effect of presentation time, F(2, 57)=0.894, p=0.42, ηp²=0.03.
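Partial eta squared can be recovered from each F ratio and its degrees of freedom: since ηp² = SS_effect / (SS_effect + SS_error) and F = (SS_effect / df_effect) / (SS_error / df_error), it follows that ηp² = F·df_effect / (F·df_effect + df_error). The sketch below applies this identity to the RT effects reported above as a consistency check; the recomputed values agree with the reported ones to within about 0.01 (for load it gives approximately 0.335).

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F ratio: F*df1 / (F*df1 + df2)."""
    return (f * df_effect) / (f * df_effect + df_error)


# (F, df_effect, df_error, reported partial eta squared) from the RT analysis.
reported = {
    "emotion": (94.08, 3, 171, 0.62),
    "load": (28.77, 2, 114, 0.33),
    "presentation time": (0.894, 2, 57, 0.03),
}
for effect, (f, df1, df2, eta_reported) in reported.items():
    recomputed = partial_eta_squared(f, df1, df2)
    print(f"{effect}: recomputed {recomputed:.3f}, reported {eta_reported}")
```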

There was a statistically significant interaction between emotion and load, F(6, 342)=2.60, p=0.018, ηp²=0.04: for anger and happiness, participants responded faster in the low-load condition than in the high- and no-load conditions, whereas for fear, participants responded faster in the high-load condition than in the low- and no-load conditions. All other interactions failed to reach statistical significance.

Post-hoc comparisons with Bonferroni correction revealed that RTs for fear (M=853.32, SE=16.34) and anger (M=803.34, SE=16.60) were significantly slower than those for happiness (M=674.14, SE=16.59) and neutral faces (M=780.19, SE=17.73), with happiness eliciting significantly faster RTs than all other emotions.
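With four emotions there are 4 × 3 / 2 = 6 pairwise comparisons, so a Bonferroni correction multiplies each unadjusted p-value by 6 (capped at 1), which is equivalent to testing each comparison at α = 0.05 / 6 ≈ 0.0083. The sketch below illustrates the procedure with generic condition labels and hypothetical p-values; it does not reproduce the study's post-hoc tests, whose unadjusted p-values are not reported.

```python
from itertools import combinations


def bonferroni(p_values: dict, alpha: float = 0.05) -> dict:
    """Bonferroni-adjust p-values: p_adj = min(1, p * m), m = number of comparisons."""
    m = len(p_values)
    adjusted = {}
    for pair, p in p_values.items():
        p_adj = min(1.0, p * m)
        adjusted[pair] = (p_adj, p_adj < alpha)
    return adjusted


conditions = ["A", "B", "C", "D"]          # e.g. four emotion categories
pairs = list(combinations(conditions, 2))  # 4 * 3 / 2 = 6 pairwise comparisons
# Hypothetical unadjusted p-values, for illustration only.
raw_p = dict(zip(pairs, [0.004, 0.0001, 0.001, 0.0002, 0.03, 0.0003]))
for pair, (p_adj, significant) in bonferroni(raw_p).items():
    print(pair, f"p_adj={p_adj:.4f}", "significant" if significant else "n.s.")
```

Here an unadjusted p of 0.03 would be nominally significant but becomes 0.18 after correction, which shows how the correction guards against inflated familywise error.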

Post-hoc comparisons with Bonferroni correction revealed that RTs in the absence of cognitive load (M=808.88, SE=15.91) were significantly slower than under low load (M=760.03, SE=15.69) and high load (M=764.34, SE=14.10), whereas RTs did not differ significantly between the low and high load conditions.

Recognition accuracy

There was a statistically significant effect of emotion, F(3, 171)=11.48, p<0.001, ηp²=0.17. However, the effects of load, F(2, 114)=1.28, p=0.28, ηp²=0.02, and presentation time, F(2, 57)=0.14, p=0.87, ηp²=0.005, were not significant. None of the interactions was significant.

Post-hoc comparisons with Bonferroni correction revealed that recognition accuracy (RA) for fear (M=5.25, SE=0.10), anger (M=5.34, SE=0.08) and neutral (M=5.29, SE=0.09) faces was significantly lower than for happy faces (M=5.74, SE=0.04), whereas these three did not differ significantly from one another.

The ratio of RT to accuracy (RT/RA) was also computed and analyzed as an outcome variable to explore the impact of accuracy on response speed; the results revealed exactly the same pattern.
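The RT/RA ratio resembles what is often called an inverse efficiency score, in which mean RT is divided by the proportion of correct responses. The sketch below applies it, for illustration only, to the marginal means reported for RT and RA; the analysis described here was an ANOVA with the ratio as the outcome variable, whereas this sketch only shows the computation on group means. Dividing by the proportion correct rather than by the raw count out of six only rescales the ratio by a constant, and the function name is hypothetical.

```python
def inverse_efficiency(mean_rt_ms: float, n_correct: float, n_trials: int = 6) -> float:
    """Mean RT divided by proportion correct (ms); larger values = less efficient."""
    if n_correct <= 0:
        raise ValueError("undefined when there are no correct responses")
    return mean_rt_ms / (n_correct / n_trials)


# Marginal means from the Results: (RT in ms, RA as correct responses out of six).
marginals = {
    "fear": (853.32, 5.25),
    "anger": (803.34, 5.34),
    "happy": (674.14, 5.74),
    "neutral": (780.19, 5.29),
}
ranking = sorted(marginals, key=lambda e: inverse_efficiency(*marginals[e]))
for emotion in ranking:
    print(f"{emotion}: {inverse_efficiency(*marginals[emotion]):.1f} ms")
```

On these means the ordering (happy, neutral, anger, fear) is the same as for RT alone, consistent with the ratio revealing the same pattern.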

Discussion

The present study investigated the effect of graded cognitive load and presentation time on facial affect recognition. One of the most interesting findings is that, despite the brief presentation of emotional faces and the restricted response time, concurrent working memory load did not impair reaction times or accuracy on the emotion recognition task. Accurate emotion processing under brief display durations is further supported by Calvo and Lundqvist [26], who found that emotions were identified efficiently when displayed for 250 ms and 500 ms, and by Getz et al. [27], who found no effect of presentation time on performance in several computerized facial affect and facial recognition tasks in both healthy participants and patients with bipolar disorder.

Given that the graded memory load performed concurrently with the emotion recognition task did not impair speed or accuracy on either task, our findings appear congruent with the automaticity theory of emotional perception and agree with the findings of Tracy and Robins [18] and of others manipulating attentional [12] or memory processes [41]. Nonetheless, given the variations in reaction time, with slower performance recorded in the no-load condition, it could conversely be argued that emotional processing is not unambiguously involuntary and independent of “top-down” processes, such as attention and task instructions [36]. According to Erthal et al. [42], attention facilitates the selective augmentation of visual stimulus perception, thus leading to increased accuracy and decreased reaction time. Hence, it can be suggested that although emotion recognition is not heavily dependent on working memory resources, it is nevertheless tightly related to visual attentional control, which appears to facilitate performance when cognitive demands are elevated but not high enough to inhibit performance [41,43-45].

This conclusion is also supported by the fact that our participants were not faster in the longest presentation time condition (1400 ms) as a result of longer exposure to the emotional stimuli. More specifically, given the seemingly unimpaired accuracy in both the working memory and the emotion recognition tasks, emotional perception together with memory load appears to demand conscious effort and sustained attention; the latter did not increase response speed, owing to a degree of cognitive overload that in our experiment was not salient enough to disrupt performance on either task [46,47]. Although this conclusion is rather tentative, it agrees with the recent findings of Herrmann et al. [23], who used functional near-infrared spectroscopy (fNIRS) and electroencephalography (EEG) in a dual-task paradigm to examine emotional processing interference on WM resources. They found that emotion identification performance was significantly worse in the dual-task condition with a visuo-spatial component (Corsi blocks) than in the single emotion identification task. Furthermore, performing the two tasks concurrently was associated with higher activation in dorsolateral areas of the prefrontal cortex, which, according to the authors, could reflect increased WM load.

The impaired accuracy and slower reaction times for fear recognition, together with the apparent advantage for happiness, are a common finding in emotion recognition studies [48]. This could be attributed to the greater complexity of the facial expressions characterizing fear compared with the less overlapping facial configuration of happiness [49,50]. Moreover, happiness has a unique affective value compared with other emotional expressions, which are relatively ambiguous and could thus compete for attentional resources [48]. Another explanation is the negativity bias, which describes the preferential allocation of attentional resources to negative cues [51]. This phenomenon has clear adaptive value, and although the processing of impending life-threatening cues is rather automatic [52], efficient recognition of fear (and, to a lesser extent, anger) occurs at the expense of accuracy and speed on concurrent tasks because of cognitive interference [18,53-58].

Finally, it should be taken into consideration that a larger sample would have led to stronger conclusions and greater statistical power. The sample size of the present study (N=60) might therefore have limited the significance of some of the statistical comparisons conducted. Accordingly, and given that the main goal of the study was to explore the effects of cognitive load, post-hoc sample size calculations were performed. These revealed that, on the basis of the observed effect size (ηp²=0.33), an N of approximately 75 would be needed to obtain statistical power at the recommended 0.80 level [58].
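Power-analysis software commonly parameterizes ANOVA effect sizes as Cohen's f rather than ηp², with f = √(ηp² / (1 - ηp²)). The sketch below converts the observed load effect (ηp² = 0.33) and labels it with Cohen's conventional benchmarks. A full sample size calculation for a repeated-measures design also depends on assumptions not reported here (e.g., the correlation among repeated measures and any non-sphericity correction), so this sketch does not attempt to reproduce the N of approximately 75; the function names are hypothetical.

```python
from math import sqrt


def cohens_f(partial_eta_sq: float) -> float:
    """Convert partial eta squared to Cohen's f: f = sqrt(eta2 / (1 - eta2))."""
    if not 0.0 <= partial_eta_sq < 1.0:
        raise ValueError("partial eta squared must lie in [0, 1)")
    return sqrt(partial_eta_sq / (1.0 - partial_eta_sq))


def cohen_benchmark(f: float) -> str:
    """Cohen's (1988) conventions for f: 0.10 small, 0.25 medium, 0.40 large."""
    if f >= 0.40:
        return "large"
    if f >= 0.25:
        return "medium"
    if f >= 0.10:
        return "small"
    return "below small"


f_load = cohens_f(0.33)
print(f"f = {f_load:.2f} ({cohen_benchmark(f_load)})")
```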

Conclusion

Our ability to recognise basic emotional expressions is crucial for the regulation of behaviour [59] and the development of positive social interactions from a young age [60]. Hence, elucidating the cognitive mechanisms underlying emotion recognition in typical individuals can help us better understand emotion recognition deficits in atypical populations, such as individuals with frontotemporal dementia [61], schizophrenia [62] and bipolar disorder [63]. Our findings provide partial support for the automatic processing of basic emotions, although the involvement of higher-order processing mechanisms cannot be excluded. The automaticity of basic emotion recognition could be further explained by arguing that in experimental settings where participants view images of emotional faces, emotion recognition may be driven primarily by low-level perceptual analysis of visual features rather than by emotional meaning or affective parameters [48], resulting in fast and efficient processing that might not require WM resources.

Taking everything into consideration, and despite the substantial expansion of knowledge regarding the neural mechanisms of emotional processing, it appears that converging operations utilizing neuroimaging, neuropsychological and physiological techniques, particularly in clinical samples, could provide further insight into the perceptual and cognitive processes involved in emotion recognition.

References

- Darwin C. The expression of the emotions in man and animals. London: John Murray. 1872.

- Ekman P. Facial expressions of emotion: New findings, new questions. Psychol Sci. 1992;3:34-8.

- Ekman P. Facial expression and emotion. Am Psychol. 1993;48(4):384-92.

- Ekman P, Friesen WV. Constants across cultures in the face and emotion. J Pers Soc Psychol. 1971;17(2):124-9.

- Shariff AF, Tracy JL. What are emotion expressions for? Curr Dir Psychol Sci. 2011;20:395-9.

- Tracy JL, Randles D, Steckler CM. The nonverbal communication of emotions. Curr Opin Behav Sci. 2015;3:25-30.

- Garcia-Rodriguez B, Ellgring H, Fusari A, et al. The role of interference in identification of emotional facial expressions in normal ageing and dementia. Eur J Cogn Psychol. 2009;21(2):428-44.

- Casares-Guillén C, García-Rodríguez B, Delgado M, et al. Age-related changes in the processing of emotional faces in a dual-task paradigm. Exp Aging Res. 2016;42(2):129-43.

- Kahneman D. Attention and effort. Englewood Cliffs, NJ: Prentice-Hall. 1973.

- Navon D, Gopher D. On the economy of the human-processing system. Psychol Rev. 1979;86(3):214.

- Carretié L. Exogenous (automatic) attention to emotional stimuli: A review. Cogn Affect Behav Neurosci. 2014;14(4):1228-58.

- Okon-Singer H, Lichtenstein-Vidne L, Cohen N. Dynamic modulation of emotional processing. Biol Psychol. 2013;92(3):480-91.

- Lindström BR, Bohlin G. Threat-relevance impairs executive functions: Negative impact on working memory and response inhibition. Emotion. 2012;12(2):384-93.

- Pessoa L, McKenna M, Gutierrez E, et al. Neural processing of emotional faces requires attention. Proc Natl Acad Sci USA. 2002;99(17):11458.

- Phillips LH, Channon S, Tunstall M, et al. The role of working memory in decoding emotions. Emotion. 2008;8(2):184-91.

- Xie W, Li H, Ying X, et al. Affective bias in visual working memory is associated with capacity. Cogn Emot. 2016.

- Mikels JA, Reuter-Lorenz PA, Beyer JA, et al. Emotion and working memory: Evidence for domain-specific processes for affective maintenance. Emotion. 2008;8(2):256-66.

- Tracy JL, Robins RW. The automaticity of emotion recognition. Emotion. 2008;8(1):81-95.

- Beneventi H, Bardon R, Ersland L, et al. An fMRI study of working memory for schematic facial expressions. Scand J Psychol. 2007;48(2):81-6.

- Critchley H, Daly E, Phillips M, et al. Explicit and implicit neural mechanisms for processing of social information from facial expressions: A functional magnetic resonance imaging study. Hum Brain Mapp. 2000;9(2):93-105.

- Erk S, Kleczar A, Walter H. Valence-specific regulation effects in a working memory task with emotional context. Neuroimage. 2007;37(2):623-32.

- Hariri AR, Bookheimer SY, Mazziotta JC. Modulating emotional responses: Effects of a neocortical network on the limbic system. Neuroreport. 2000;11(1):43-8.

- Herrmann MJ, Neueder D, Troeller AK, et al. Simultaneous recording of EEG and fNIRS during visuo-spatial and facial expression processing in a dual task paradigm. Int J Psychophysiol. 2016;109:21-8.

- Rama P, Martinkauppi S, Linnankoski I, et al. Working memory of identification of emotional vocal expressions: An fMRI study. Neuroimage. 2001;13(6):1090-101.

- Pessoa L, Padmala S, Morland T. Fate of unattended fearful faces in the amygdala is determined by both attentional resources and cognitive modulation. Neuroimage. 2005;28(1):249-55.

- Calvo MG, Lundqvist D. Facial expressions of emotion (KDEF): Identification under different display-duration conditions. Behav Res Methods. 2008;40(1):109-15.

- Getz GE, Shear PK, Strakowski SM. Facial affect recognition deficits in bipolar disorder. J Int Neuropsychol Soc. 2003;9:623-32.

- Tomasik D, Ruthruff E, Allen PA, et al. Nonautomatic emotion perception in a dual-task situation. Psychon Bull Rev. 2009;16(2):282-88.

- Cowan N, Day L, Saults J, et al. The role of verbal output time in the effects of word length on immediate memory. J Mem Lang. 1992;31(1):1-17.

- Mueller ST, Seymour TL, Kieras DE, et al. Theoretical implications of articulatory duration, phonological similarity, and phonological complexity in verbal working memory. J Exp Psychol Learn Mem Cogn. 2003;29(6):1353-80.

- De Fockert JW, Rees G, Frith CD, et al. The role of working memory in visual selective attention. Science. 2001;291:1803-06.

- Lavie N. Distracted and confused? Selective attention under load. Trends Cogn Sci. 2005;9(2):75-82.

- Lavie N, De Fockert JW. The role of working memory in attentional capture. Psychon Bull Rev. 2005;12:669-74.

- Lavie N, Hirst A, De Fockert JW, et al. Load theory of selective attention and cognitive control. J Exp Psychol Gen. 2004;133(3):339-54.

- Bargh JA. The four horsemen of automaticity: Intention, awareness, efficiency and control as separate issues. Handbook of Social Cognition, Lawrence Erlbaum. 1994.

- Pessoa L. To what extent are emotional visual stimuli processed without attention and awareness? Curr Opin Neurobiol. 2005;15(2):188-96.

- Hager JC, Ekman P, Friesen WV. Facial action coding system: The Manual. Salt Lake City, UT: Research Nexus. 2002.

- Wilson MD. The MRC psycholinguistic database: Machine readable dictionary, Version 2. Behav Res Methods Instrum Comput. 1988;20:6-11.

- Bradley MM, Lang PJ. Affective norms for English words (ANEW): Instruction manual and affective ratings. Technical Report C-1. The Center for Research in Psychophysiology, University of Florida. 1999.

- Jalbert A, Neath I, Bireta TJ, et al. When does length cause the word length effect? J Exp Psychol Learn Mem Cogn. 2011;37(2):338-53.

- Kensinger EA, Corkin S. Effect of negative emotional content on working memory and long-term memory. Emotion. 2003;3(4):378-93.

- Erthal FS, de Oliveira L, Mocaiber I, et al. Load-dependent modulation of affective picture processing. Cogn Affect Behav Neurosci. 2005;5(4):388-95.

- Anderson AK, Phelps EA. Lesions of the human amygdala impair enhanced perception of emotionally salient events. Nature. 2001;411:305-09.

- LeDoux JE. Emotion: Clues from the brain. Annu Rev Psychol. 1995;46(1):209.

- Vuilleumier P, Huang YM. Emotional attention: uncovering the mechanisms of affective biases in perception. Curr Dir Psychol Sci. 2009;18(3):148-52.

- Lim S, Padmala S, Pessoa L. Affective learning modulates spatial competition during low-load attentional conditions. Neuropsychologia. 2008;46(5):1267-78.

- Yates A, Ashwin C, Fox E. Does emotion processing require attention? The effects of fear conditioning and perceptual load. Emotion. 2010;10(6):822-30.

- Nummenmaa L, Calvo MG. Dissociation between recognition and detection advantage for facial expressions: A meta-analysis. Emotion. 2015;15(2):243-56.

- Johnston PJ, Katsikitis M, Carr VJ. A generalised deficit can account for problems in facial emotion recognition in schizophrenia. Biol Psychol. 2001;58(3):203-27.

- Leppänen JM, Tenhunen M, Hietanen JK. Faster choice-reaction times to positive than to negative facial expressions: The role of cognitive and motor processes. J Psychophysiol. 2003;17(3):113-23.

- Taylor SE. Asymmetrical effects of positive and negative events: The mobilization-minimization hypothesis. Psychol Bull. 1991;110(1):67-85.

- Lang PJ, Davis M, Öhman A. Fear and anxiety: Animal models and human cognitive psychophysiology. J Affect Disord. 2000;61(3):137-59.

- Baumeister RF, Bratslavsky E, Finkenauer C, et al. Bad is stronger than good. Rev Gen Psychol. 2001;5(4):323-70.

- Carretié L, Mercado F, Tapia M, et al. Emotion, attention and the 'negativity bias', studied through event-related potentials. Int J Psychophysiol. 2001;41(1):75-85.

- Eastwood JD, Smilek D, Merikle P. Negative facial expression captures attention and disrupts performance. Percept Psychophys. 2003;65(3):352-8.

- Öhman A, Lundqvist D, Esteves F. The face in the crowd revisited: A threat advantage with schematic stimuli. J Pers Soc Psychol. 2001;80(3):381-96.

- Pratto F, Bargh JA. Stereotyping based on apparently individuating information: Trait and global components of sex stereotypes under attention overload. J Exp Soc Psychol. 1991;27(1):26-47.

- Cohen J. Statistical power analysis for the behavioral sciences. Hillsdale, NJ: Lawrence Erlbaum Associates. 1988;20-26.

- Campos JJ, Thein S, Owen D. A Darwinian legacy to understanding human infancy: Emotional expressions as behavior regulators. Ann N Y Acad Sci. 2003;1000:110-34.

- Izard C, Fine S, Schultz D, et al. Emotion knowledge as a predictor of social behavior and academic competence in children at risk. Psychol Sci. 2001;12(1):18-23.

- Bora E, Velakoulis D, Walterfang M. Meta-analysis of facial emotion recognition in behavioral variant frontotemporal dementia: Comparison with Alzheimer disease and healthy controls. J Geriatr Psychiatry Neurol. 2016;29(4):205-11.

- Corcoran CM, Keilp JG, Kayser J, et al. Emotion recognition deficits as predictors of transition in individuals at clinical high risk for schizophrenia: A neurodevelopmental perspective. Psychol Med. 2015;45(14):2959-73.

- Vierck E, Porter RJ, Joyce PR. Facial recognition deficits as a potential endophenotype in bipolar disorder. Psychiatry Res. 2015;230(1):102-7.