Research Article - Biomedical Research (2018) Volume 29, Issue 16

Study on impulsive assessment of chronic pain correlated expressions in facial images

Ramkumar G* and Logashanmugam E

Department of ECE, Sathyabama Institute of Science and Technology, Chennai, India

- *Corresponding Author:

- Ramkumar G

Department of ECE

Sathyabama Institute of Science and Technology, Tamil Nadu, India

Accepted on August 6, 2018

DOI: 10.4066/biomedicalresearch.29-18-886

Abstract

Pain, regarded as the fifth vital sign, is an objective rather than a subjective sensation and is widely recognized in health care. The visible changes on the face of a person in pain last only a few seconds and occur spontaneously, so tracking them in a clinical setting is difficult and time-consuming. This has motivated researchers from medicine, psychology and computer science to pursue interdisciplinary research and to develop concrete tools for this social need. In clinical settings, pain intensity is usually measured by self-report. This technique has limitations: it depends on the subject's perception and knowledge and provides no actual timing information. In this work, pain is coded as a sequence of facial action units (AUs) in order to obtain an objective measure of pain. Using FACS coding and independent datasets, the system's performance is tested against ground truth. The proposed method compares classifiers such as SVM and ANFIS for pain classification on the UNBC-McMaster Shoulder Pain Expression database.

Keywords

Face expression, Pain classification, DWT, AAM, ANFIS.

Introduction

Pain expressions in particular are of great significance for social interaction and for communicating threat and distress [1]. Moreover, pain faces receive enhanced cortical processing compared with other facial expressions [2,3], which points to the special relevance of facial pain expressions. Pain is a complex, multidimensional concept that is hard to define. Individual pain experiences influence cognitive, emotional, and behavioral responses. Pain assessment is a particularly important challenge for critically ill people who cannot report their pain level. In critical care, many factors may impair verbal communication, including tracheal intubation, a reduced level of consciousness, the use of restraints, sedation, and paralytic medications [4]. These patients are more likely to receive inadequate analgesia than those who can communicate, yet they are also prone to painful conditions. Accurate pain assessment in adult critical care settings is essential: it has been reported that 35% to 55% of nurses underestimate patients' pain, and in one survey 63.6% of patients did not receive any analgesic medication before painful procedures [5].

In addition, unconscious or sedated patients cannot communicate their pain level using numeric pain rating scales (NRS, 0-10) and are therefore at risk of inadequate pain treatment. Inaccurate pain assessment, and the resulting undertreatment of pain in critically ill adults, can have significant physiologic consequences: for example, it increases myocardial workload, which can lead to myocardial ischemia, or it impairs gas exchange, triggering a cascade of events that can lead to pneumonia [4,5]. The first step in providing adequate pain relief is systematic and consistent assessment and documentation of pain. Identifying suitable pain scales for nonverbal patients has been the focus of several studies; to date, however, no single pain assessment tool is universally endorsed for use in nonverbal patients. A common component of behavioral pain tools is the assessment of facial activity. Although facial expression is an important communicative indicator of pain intensity, reliable and accurate methods for interpreting facial expressions of pain have not been systematically evaluated in critically ill, nonverbal patients [6]. The face conveys a wealth of information about human behavior, and facial expression is the pain behavior most commonly used in pain scales for patients who cannot communicate verbally. Facial expressions provide an important behavioral measure for the study of emotion, cognitive processes, and social interaction, and they have great potential for pain assessment, but precise methods for interpreting facial expressions of pain in nonverbal, critically ill adults have not yet been established. The facial actions specific to pain have been identified using the Facial Action Coding System (FACS). These include lowered brows, raised cheeks, tightened eyelids, a raised upper lip or opened mouth, and closed eyes [7].

Dataset

Facial expression analysis has received increasing attention over the past decade. As a result, many publicly available datasets exist that facilitate the study of facial expressions and mostly address prevailing emotional states and affective dimensions [8]. The most widely used dataset for pain expression is the UNBC-McMaster Shoulder Pain Expression Archive Database. It consists of 200 videos of 25 participants with shoulder pain. The participants underwent a series of active and passive range-of-motion tests with their affected and unaffected limbs. The dataset also includes self-reported and observer-rated pain intensity at the video sequence level. In addition, at the frame level it provides FACS codes and facial landmarks.
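The frame-level FACS codes in UNBC-McMaster are commonly summarized by the Prkachin and Solomon Pain Intensity (PSPI) score, which is built from the pain-related action units: PSPI = AU4 + max(AU6, AU7) + max(AU9, AU10) + AU43. The paper does not state whether it uses PSPI, so the sketch below is only an illustration. The dictionary layout of a frame's AU intensities and the helpers `pspi` and `is_pain_frame` are hypothetical, and treating PSPI > 0 as "pain" is an assumed (though common) convention.

```python
def pspi(au: dict[int, float]) -> float:
    """Prkachin-Solomon Pain Intensity for one frame.

    `au` maps a FACS action-unit number to its intensity (hypothetical layout);
    missing AUs count as 0. UNBC-McMaster scores AU4/6/7/9/10 on 0-5 and AU43 as 0/1.
    """
    def a(k: int) -> float:
        return au.get(k, 0)

    return a(4) + max(a(6), a(7)) + max(a(9), a(10)) + a(43)


def is_pain_frame(au: dict[int, float], threshold: float = 0) -> bool:
    """Binary pain label; PSPI > threshold (0 by default) is an assumed split."""
    return pspi(au) > threshold


frame = {4: 2, 6: 1, 7: 3, 10: 2, 43: 1}
print(pspi(frame), is_pain_frame(frame))  # prints: 8 True
```

Taking the maximum within the pairs AU6/AU7 and AU9/AU10 keeps closely related actions from being counted twice, so the score ranges from 0 to 16.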

Pain expression approaches

Most studies use the UNBC-McMaster dataset, and some use the BioVid database. Other approaches are tested on non-public datasets, which makes their results difficult to compare or reproduce [9]. Many works have addressed pain detection; however, there is a trend towards the more challenging task of pain intensity estimation [10]. Other authors classify genuine versus faked pain, or pain versus basic emotions [11].

Proposed System

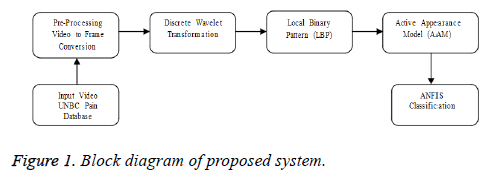

Figure 1 shows the block diagram of the proposed architecture, which illustrates the entire process used in this work to classify pain video sequences. The video sequences are taken from the UNBC-McMaster Shoulder Pain dataset [12].

Discrete wavelet transformation

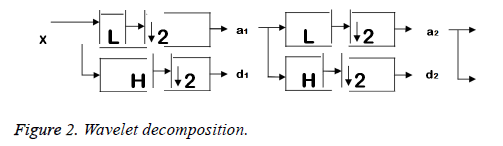

The discrete wavelet transform (DWT) is a linear transformation that operates on a data vector whose length is an integer power of two, transforming it into a numerically different vector of the same length [13]. It separates the data into different frequency components and then studies each component with a resolution matched to its scale. The DWT is computed with a cascade of filtering operations, each followed by factor-2 subsampling (Figure 2).

The filter outputs are given by Equations 1 and 2.

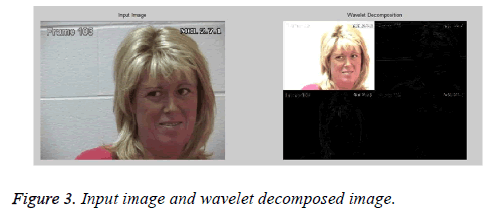
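A standard form of one analysis step, consistent with the definitions of $a_j$, $d_j$, $l[n]$ and $h[n]$ in the next paragraph, is the following; it is assumed (not confirmed by the text, where the equations appear only as an image) to correspond to Equations 1 and 2:

$$ a_{j+1}[p] = \sum_{n} l[n - 2p]\, a_j[n] \qquad (1) $$

$$ d_{j+1}[p] = \sum_{n} h[n - 2p]\, a_j[n] \qquad (2) $$

Evaluating the filtered sequence only at the even positions $2p$ is the factor-2 subsampling, so each step halves the length of $a_j$.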

The components $a_j$ are used in the next step of the transform, and the components $d_j$ form the output of the transform; $l[n]$ and $h[n]$ are the coefficients of the low-pass and high-pass filters, respectively. Using the DWT, the image is decomposed into approximation and detail components in each sub-band, corresponding to the approximation, horizontal, vertical, and diagonal orientations, respectively. Wavelets transform the image into a set of wavelet coefficients that can be stored more efficiently than pixel blocks. Because wavelets have rough edges, they can render images better by eliminating blockiness. In the DWT, a time-scale representation of the digital signal is obtained using digital filtering techniques: the signal to be analyzed is passed through filters with different cut-off frequencies at different scales. The DWT is easy to implement and reduces the computation time and resources required. In general, the 1D DWT has an advantage over the 2D DWT for face recognition because it captures both the global representation and the edge details (Figure 3). Hence we apply the 1D DWT to the input image.
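The filtering-and-subsampling cascade can be sketched with the Haar wavelet, chosen here only because its two-tap filters make the arithmetic easy to check; the paper does not name the wavelet it uses. `dwt_step` applies the low-pass and high-pass filters and keeps every second output, `dwt` repeats this on the approximation, and `dwt_rows` is a hypothetical helper that applies the 1D transform to every image row.

```python
import numpy as np

# Orthonormal Haar analysis filters (assumed; the paper does not name its wavelet).
LOW = np.array([1.0, 1.0]) / np.sqrt(2.0)    # l[n]
HIGH = np.array([1.0, -1.0]) / np.sqrt(2.0)  # h[n]


def dwt_step(a: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """One analysis step: a_{j+1}[p] = sum_n l[n - 2p] a_j[n], and likewise d_{j+1} with h."""
    if a.size % 2:
        raise ValueError("signal length must be even")
    pairs = a.reshape(-1, 2)          # row p holds (a_j[2p], a_j[2p + 1])
    return pairs @ LOW, pairs @ HIGH  # filtering followed by factor-2 subsampling


def dwt(signal: np.ndarray, levels: int) -> list[np.ndarray]:
    """Cascade of dwt_step on the approximation; returns [a_J, d_J, ..., d_1]."""
    a = np.asarray(signal, dtype=float)
    details = []
    for _ in range(levels):
        a, d = dwt_step(a)
        details.append(d)
    return [a] + details[::-1]


def dwt_rows(image: np.ndarray, levels: int = 1) -> np.ndarray:
    """Hypothetical helper: 1D DWT of every image row, laid out as [a_J | d_J | ... | d_1]."""
    rows = np.asarray(image, dtype=float)
    return np.vstack([np.concatenate(dwt(row, levels)) for row in rows])


coeffs = dwt(np.array([4.0, 2.0, 5.0, 5.0]), levels=2)
print([c.round(4).tolist() for c in coeffs])  # [[8.0], [-2.0], [1.4142, 0.0]]
```

Because these Haar filters are orthonormal, the transform preserves energy: the sum of squares of [4, 2, 5, 5] is 70 both before and after two levels, and a row of length N always yields N coefficients.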

Face detection

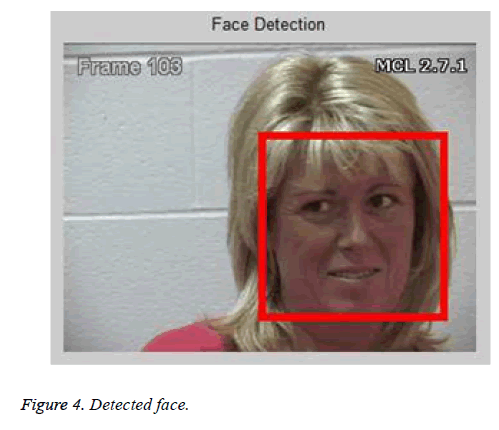

The Viola-Jones method is used for face detection. Its basic principle is to scan a sub-window capable of detecting faces across a given input image (Figure 4). The standard image processing approach would be to rescale the input image to different sizes and then run a fixed-size detector over these images. This approach turns out to be rather time-consuming because of the computation of the differently sized images. In contrast to the standard approach, Viola and Jones rescale the detector instead of the input image and run the detector many times over the image, each time with a different size. At first one might expect both approaches to be equally time-consuming, but Viola and Jones devised a scale-invariant detector that requires the same number of calculations whatever the size. This detector is constructed using a so-called integral image and some simple rectangular features reminiscent of Haar wavelets [14].
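The scale invariance rests on the integral image: once it is built, the sum over any rectangle costs four lookups regardless of the rectangle's size, so a feature evaluated by a scaled-up detector costs the same as at the base scale. The sketch below shows this with one two-rectangle Haar-like feature; it is not the full Viola-Jones detector (no AdaBoost feature selection or cascade stages), and the function names are illustrative.

```python
import numpy as np


def integral_image(img: np.ndarray) -> np.ndarray:
    """ii[y, x] = sum of img[:y, :x], with a leading zero row and column."""
    img = np.asarray(img, dtype=np.float64)
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1))
    ii[1:, 1:] = img.cumsum(axis=0).cumsum(axis=1)
    return ii


def rect_sum(ii: np.ndarray, x: int, y: int, w: int, h: int) -> float:
    """Sum of the w x h rectangle whose top-left pixel is (x, y): four lookups for any size."""
    return float(ii[y + h, x + w] - ii[y, x + w] - ii[y + h, x] + ii[y, x])


def two_rect_feature(ii: np.ndarray, x: int, y: int, w: int, h: int) -> float:
    """Horizontal two-rectangle Haar-like feature: left half minus right half."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


img = np.arange(16).reshape(4, 4)
ii = integral_image(img)
print(rect_sum(ii, 1, 1, 2, 2), two_rect_feature(ii, 0, 0, 4, 4))  # 30.0 -16.0
```

For a detector scaled by a factor k, the same `two_rect_feature` call is made with x, y, w and h multiplied by k, and its cost does not change.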

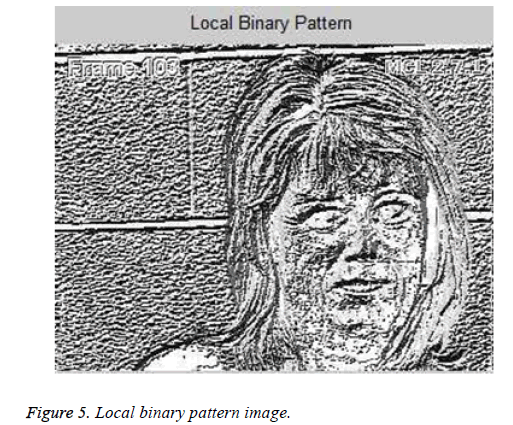

LBP based feature extraction

Extracting effective facial features from the original face images is a key step for robust facial expression recognition. LBP stands for local binary pattern, and it has various applications such as image retrieval, remote sensing, biomedical image analysis, face image analysis, motion analysis, and environment modeling. For each pixel in the image, a binary code is computed by thresholding the neighboring pixel values against the central pixel value; finally, a histogram is built to collect the different binary patterns [15].

The LBP operator proposed in [16] is a gray-scale and rotation-invariant texture operator based on local binary patterns. Because it compares the gray value of a pixel with the gray values of its neighboring pixels arranged in a circularly symmetric neighborhood, the LBP operator is invariant to gray-scale variations. Rotation invariance is achieved by selecting the fixed set of rotation-invariant patterns that the LBP operator uses. For a 3 × 3 neighborhood, the central pixel is compared with its adjacent pixels: if the value of a neighbor pixel is greater than that of the central pixel it is encoded as 1, otherwise as 0. For each pixel, the LBP binary code is obtained by concatenating these binary values in clockwise order, starting from its top-left neighbor. In computing the LBP histogram, each uniform pattern is assigned to a separate bin and all non-uniform patterns are assigned to a single bin of the feature histogram [17]. Face images can be seen as a composition of small local regions that can be effectively described by LBP histograms. To take the spatial information of face images into account, a face image is divided into m small blocks. For each block, a histogram of LBP codes is computed independently, and the resulting m histograms are concatenated into a single spatially enhanced global histogram. This histogram contains information about the distribution of local micro-patterns, such as edges, spots and flat regions, over the whole image (Figure 5). The main idea of the proposed algorithm is to enhance or weaken the contribution of each feature according to its discriminative importance in expression classification.
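A minimal NumPy sketch of these LBP features follows, assuming a 3 × 3 neighborhood read clockwise from the top-left neighbor, the neighbor-greater-than-center test described above, the 59-bin uniform mapping (58 uniform patterns plus one shared bin for all non-uniform ones), and a hypothetical 4 × 4 block grid. It omits the rotation-invariant mapping and the per-feature weighting mentioned in the text; the function names are illustrative.

```python
import numpy as np

# (dy, dx) offsets of the 8 neighbours, clockwise from the top-left one.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_codes(img: np.ndarray) -> np.ndarray:
    """8-bit LBP code of every interior pixel; a neighbour greater than the centre gives bit 1."""
    img = np.asarray(img, dtype=np.int32)
    h, w = img.shape
    centre = img[1:h - 1, 1:w - 1]
    codes = np.zeros_like(centre)
    for k, (dy, dx) in enumerate(OFFSETS):
        neigh = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        # The first neighbour in clockwise order becomes the most significant bit.
        codes |= (neigh > centre).astype(np.int32) << (7 - k)
    return codes


def uniform_table() -> np.ndarray:
    """Map each 8-bit code to one of 59 bins: 58 uniform patterns, then one shared bin (58)."""
    table = np.full(256, 58, dtype=np.int64)
    next_bin = 0
    for code in range(256):
        bits = [(code >> i) & 1 for i in range(8)]
        transitions = sum(bits[i] != bits[(i + 1) % 8] for i in range(8))
        if transitions <= 2:  # at most two circular 0/1 transitions
            table[code] = next_bin
            next_bin += 1
    return table


def lbp_feature(img: np.ndarray, grid: tuple[int, int] = (4, 4)) -> np.ndarray:
    """Split the binned LBP map into grid blocks, histogram each block, concatenate."""
    binned = uniform_table()[lbp_codes(img)]
    hists = [np.bincount(block.ravel(), minlength=59)
             for band in np.array_split(binned, grid[0], axis=0)
             for block in np.array_split(band, grid[1], axis=1)]
    return np.concatenate(hists)


patch = np.array([[5, 9, 1], [4, 6, 7], [2, 3, 8]])
print(lbp_codes(patch))  # [[88]]
```

With m = 16 blocks the feature vector has 59 × 16 = 944 entries, and together the block histograms count every interior pixel once.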

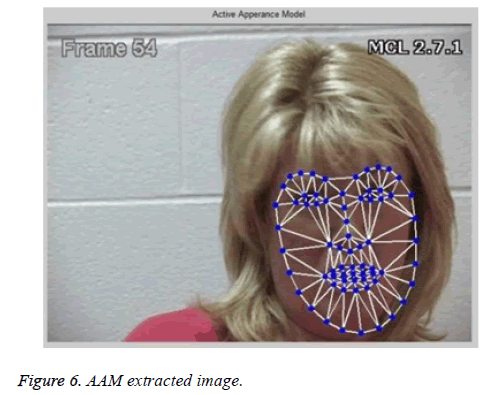

Active appearance model

In machine learning, the type of representation is known to affect recognition performance. Active appearance models (AAMs) provide a compact statistical representation of the shape and appearance variation of the face as measured in 2D images [18,19]. This representation decouples the shape and appearance of a face image. Given a predefined linear shape model with linear appearance variation, an AAM aligns the shape model to an unseen image containing the face and facial expression of interest. In general, AAMs fit their shape and appearance components through a gradient descent search, although other optimization methods have been used with similar results [20].

The shape $s$ of an AAM is described by a 2D triangulated mesh. In particular, the coordinates of the mesh vertices define the shape, $s = [x_1, y_1, x_2, y_2, \ldots, x_n, y_n]$ (Figure 6) [19].

These vertex locations correspond to a source appearance image, from which the shape is aligned. Since AAMs allow linear shape variation, the shape $s$ can be expressed as a base shape $s_0$ plus a linear combination of $m$ shape vectors $s_i$:

$$ s = s_0 + \sum_{i=1}^{m} p_i s_i $$

where the coefficients $p = (p_1, \ldots, p_m)^T$ are the shape parameters. These shape parameters are typically divided into similarity parameters $p_s$ and object-specific parameters $p_o$, such that $p^T = (p_s^T, p_o^T)$.
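The linear shape model can be sketched with principal component analysis, a common way (assumed here, not stated in the text) to obtain $s_0$ and orthonormal shape vectors $s_i$ from training shapes. The sketch covers only the object-specific part $p_o$: it assumes the training shapes have already been similarity-normalized, and the function names are hypothetical.

```python
import numpy as np


def build_shape_model(shapes: np.ndarray, m: int) -> tuple[np.ndarray, np.ndarray]:
    """Base shape s0 and m orthonormal shape vectors s_i (rows of S) via PCA.

    shapes: (N, 2n) array, one aligned shape [x1, y1, ..., xn, yn] per row.
    """
    s0 = shapes.mean(axis=0)
    # Right singular vectors of the centred data = principal modes of shape variation.
    _, _, vt = np.linalg.svd(shapes - s0, full_matrices=False)
    return s0, vt[:m]


def shape_params(s: np.ndarray, s0: np.ndarray, S: np.ndarray) -> np.ndarray:
    """p_i = s_i . (s - s0); valid because the rows of S are orthonormal."""
    return S @ (s - s0)


def synthesize(s0: np.ndarray, S: np.ndarray, p: np.ndarray) -> np.ndarray:
    """s = s0 + sum_i p_i s_i."""
    return s0 + p @ S
```

Projecting a shape with `shape_params` and rebuilding it with `synthesize` reproduces it exactly when it lies in the span of the m retained modes; otherwise the result is its closest approximation in that span.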

We refer to $p_s$ and $p_o$ as the rigid and non-rigid shape vectors of the face, respectively. Rigid parameters are associated with the geometric similarity transform (translation, rotation and scale). Non-rigid parameters are associated with residual shape variations such as mouth opening, eyes closing, and so on. Procrustes alignment [18] is used to estimate the base shape $s_0$. Once the base shape and shape parameters have been estimated, we can normalize for various factors to obtain different representations, such as the rigid-normalized shape, the rigid-normalized appearance, and the non-rigid-normalized appearance.
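Rigid normalization and the Procrustes estimate of $s_0$ can be sketched as follows, assuming landmarks stored as an (n, 2) array. `similarity_align` solves the orthogonal Procrustes problem for the best translation, rotation and uniform scale; `procrustes_mean` is a simple generalized-Procrustes loop that rescales the mean to unit size at each iteration so that it does not shrink. Both are illustrative helpers, not the authors' code.

```python
import numpy as np


def similarity_align(shape: np.ndarray, ref: np.ndarray) -> np.ndarray:
    """Align an (n, 2) shape to ref with the best translation, rotation and uniform scale."""
    mu_s, mu_r = shape.mean(axis=0), ref.mean(axis=0)
    x, y = shape - mu_s, ref - mu_r
    # Orthogonal Procrustes: R = U D V^T from the SVD of x^T y, with D ruling out reflections.
    u, sig, vt = np.linalg.svd(x.T @ y)
    d = np.diag([1.0, np.sign(np.linalg.det(u @ vt))])
    rot = u @ d @ vt
    scale = np.trace(np.diag(sig) @ d) / (x ** 2).sum()
    return scale * x @ rot + mu_r


def procrustes_mean(shapes: np.ndarray, iters: int = 10) -> np.ndarray:
    """Estimate the base shape s0: align every shape to the current mean, re-average, repeat."""
    mean = shapes[0]
    for _ in range(iters):
        mean = np.mean([similarity_align(s, mean) for s in shapes], axis=0)
        mean = mean - mean.mean(axis=0)
        mean = mean / np.linalg.norm(mean)  # fix the scale so the mean does not shrink
    return mean
```

What remains after `similarity_align` is the non-rigid variation, which is what the object-specific parameters $p_o$ describe.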

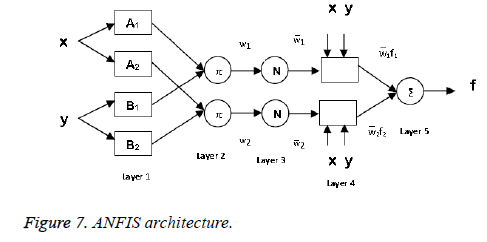

ANFIS classification

The adaptive neuro-fuzzy inference system (ANFIS) combines the best features of fuzzy inference systems and artificial neural networks [21]. ANFIS serves as a basis for constructing a set of fuzzy if-then rules with appropriate membership functions to generate the stipulated input-output pairs (Figure 7). It is a fuzzy inference system trained with backpropagation, which attempts to reduce the error and improve performance. The fuzzy inference system is configured through a graphical user interface (GUI) with four tools: (1) the Fuzzy Inference System (FIS) Editor, which handles the input and output variables; (2) the Membership Function Editor, which plots the shapes of all membership functions of each variable; (3) the Rule Editor, which is used to edit the list of rules in the system; and (4) the Rule Viewer, which is used to inspect the system and to help analyze the behavior of specific rules [22].

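Layer 1: Every node in layer 1 is an adaptive node. The outputs of layer 1 are the fuzzy membership grades of the inputs, which are given by:

$$ O_{1,i} = \mu_{A_i}(x), \quad i = 1, 2 $$

$$ O_{1,i} = \mu_{B_{i-2}}(y), \quad i = 3, 4 $$

where $x$ and $y$ are the inputs to node $i$, $A_i$ and $B_{i-2}$ are linguistic labels, and $\mu_{A_i}(x)$ and $\mu_{B_{i-2}}(y)$ can adopt any fuzzy membership function. Usually, $\mu_{A_i}(x)$ is chosen as the generalized bell function:

$$ \mu_{A_i}(x) = \frac{1}{1 + \left| \dfrac{x - c_i}{a_i} \right|^{2 b_i}} $$

where $a_i$, $b_i$ and $c_i$ are the parameters of the bell-shaped membership function.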

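Layer 2: The nodes of this layer are labeled M, indicating that they act as simple multipliers. The outputs of this layer, the firing strengths of the rules, can be represented as:

$$ O_{2,i} = w_i = \mu_{A_i}(x)\, \mu_{B_i}(y), \quad i = 1, 2 $$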

Layer 3: It contains fixed nodes that compute the ratio of each rule's firing strength to the sum of the firing strengths of all rules:

$$ O_{3,i} = \bar{w}_i = \frac{w_i}{w_1 + w_2}, \quad i = 1, 2 $$

Layer 4: In this layer, the nodes are adaptive nodes. The outputs of this layer are computed by the formula given below:

$$ O_{4,i} = \bar{w}_i f_i = \bar{w}_i \left( p_i x + q_i y + r_i \right) $$

where $\bar{w}_i$ is the normalized firing strength from layer 3 and $\{p_i, q_i, r_i\}$ are the coefficients of the first-order rule output $f_i$.

Layer 5: The single node in this layer computes the sum of all incoming signals. Hence, the overall output of the model is given by:

$$ O_5 = \sum_{i} \bar{w}_i f_i = \frac{\sum_{i} w_i f_i}{\sum_{i} w_i} $$

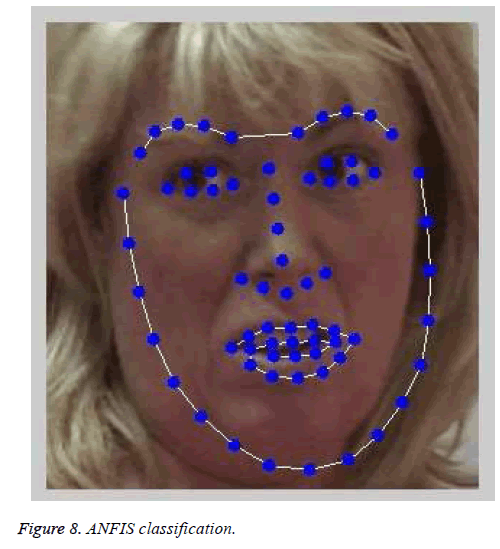

There are two adaptive layers in this ANFIS architecture, namely the first layer and the fourth layer. The first layer has three modifiable parameters $\{a_i, b_i, c_i\}$, which are related to the input membership functions; these are usually called premise parameters. The fourth layer also has three modifiable parameters $\{p_i, q_i, r_i\}$, belonging to the first-order polynomial; these are the so-called consequent parameters (Figure 8).
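The five layers can be traced end to end with a small forward pass. This sketch assumes the two-input, two-rule first-order Sugeno system implied by the layer equations, with generalized bell membership functions; the array layout of `premise` and `consequent` is a hypothetical choice, not the authors' implementation.

```python
import numpy as np


def bell(x: float, a: float, b: float, c: float) -> float:
    """Generalized bell membership function 1 / (1 + |(x - c) / a|^(2b))."""
    return 1.0 / (1.0 + abs((x - c) / a) ** (2 * b))


def anfis_forward(x: float, y: float, premise: np.ndarray, consequent: np.ndarray) -> float:
    """Two-input, two-rule first-order Sugeno ANFIS; rule i: if x is A_i and y is B_i then f_i.

    premise[0][i] = (a, b, c) of A_i, premise[1][i] = (a, b, c) of B_i  -> shape (2, 2, 3)
    consequent[i] = (p_i, q_i, r_i)                                    -> shape (2, 3)
    """
    mu_a = np.array([bell(x, *premise[0][i]) for i in range(2)])   # Layer 1
    mu_b = np.array([bell(y, *premise[1][i]) for i in range(2)])
    w = mu_a * mu_b                                                 # Layer 2: firing strengths
    w_bar = w / w.sum()                                             # Layer 3: normalization
    f = consequent @ np.array([x, y, 1.0])                          # f_i = p_i x + q_i y + r_i
    return float((w_bar * f).sum())                                 # Layers 4 and 5


premise = np.array([[[2.0, 2.0, 0.0], [2.0, 2.0, 10.0]],
                    [[2.0, 2.0, 0.0], [2.0, 2.0, 10.0]]])
consequent = np.array([[1.0, 0.0, 0.0], [0.0, 0.0, 10.0]])
print(anfis_forward(5.0, 5.0, premise, consequent))  # equal firing strengths -> 7.5
```

A quick sanity check: when both rules share the same consequent parameters, the output reduces to $p x + q y + r$ whatever the premise parameters are.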

To start ANFIS training, one first needs a training dataset that contains the desired input/output data pairs of the target system to be modeled and, optionally, a checking dataset that can test the generalization capability of the resulting fuzzy inference system. The initial FIS structure passed to ANFIS specifies the number of rules in the FIS, the number of membership functions for each input, and their parameters [23-25].
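The text mentions backpropagation. A widely used ANFIS training scheme (Jang's hybrid learning rule) combines gradient descent on the premise parameters with a least-squares estimate of the consequent parameters. The sketch below shows only that least-squares half, for the same hypothetical two-input, two-rule system, as an illustration of the technique rather than the authors' training procedure; all names are illustrative.

```python
import numpy as np


def bell(x: np.ndarray, a: float, b: float, c: float) -> np.ndarray:
    """Generalized bell membership function, vectorized over x."""
    return 1.0 / (1.0 + np.abs((x - c) / a) ** (2 * b))


def normalized_strengths(X: np.ndarray, premise: np.ndarray) -> np.ndarray:
    """(N, 2) inputs -> (N, 2) normalized firing strengths w_bar of the two rules."""
    w = np.stack([bell(X[:, 0], *premise[0][i]) * bell(X[:, 1], *premise[1][i])
                  for i in range(2)], axis=1)
    return w / w.sum(axis=1, keepdims=True)


def fit_consequents(X: np.ndarray, t: np.ndarray, premise: np.ndarray) -> np.ndarray:
    """Least-squares (p_i, q_i, r_i) for fixed premise parameters.

    The output sum_i w_bar_i (p_i x + q_i y + r_i) is linear in the consequents, so each
    training pair gives one row [w1 x, w1 y, w1, w2 x, w2 y, w2] of a linear system.
    """
    wb = normalized_strengths(X, premise)
    xa = np.hstack([X, np.ones((len(X), 1))])             # rows [x, y, 1]
    A = np.hstack([wb[:, [i]] * xa for i in range(2)])    # (N, 6) design matrix
    theta, *_ = np.linalg.lstsq(A, t, rcond=None)
    return theta.reshape(2, 3)                            # row i = (p_i, q_i, r_i)
```

Because the consequent parameters enter the output linearly, this step has a closed-form solution for each fixed set of premise parameters; only the premise parameters then need iterative updates.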

Experimental Results

For all results in this section, a subset of the UNBC database was extracted. Because the database is large and many of its frames are nearly identical (it is a video stream), using every frame is unnecessary and greatly increases the computation time (Figure 9).

The ANFIS configuration is summarized below:

Number of nodes: 24

Number of linear parameters: 10

Number of nonlinear parameters: 15

Total number of parameters: 25

Number of training data pairs: 958

Number of checking data pairs: 0

Number of fuzzy rules: 5
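The reported counts are consistent with a single-input first-order Sugeno ANFIS that has five three-parameter membership functions (for example, the generalized bell function of Layer 1) and one rule per membership function: 5 × 3 = 15 premise (nonlinear) parameters and 5 × (1 + 1) = 10 consequent (linear) parameters. The paper does not state its input dimensionality, so this is an inference from the numbers; the hypothetical helper below makes the arithmetic explicit for a grid-partitioned system.

```python
def anfis_param_counts(n_inputs: int, n_mfs: int, mf_params: int = 3) -> tuple[int, int, int]:
    """(linear, nonlinear, total) parameter counts for a grid-partitioned first-order Sugeno ANFIS.

    Assumes every input has n_mfs membership functions with mf_params parameters each
    (3 for a generalized bell: a, b, c) and one rule per combination of input MFs.
    """
    rules = n_mfs ** n_inputs
    nonlinear = n_inputs * n_mfs * mf_params  # premise parameters
    linear = rules * (n_inputs + 1)           # one coefficient per input plus a constant, per rule
    return linear, nonlinear, linear + nonlinear


print(anfis_param_counts(1, 5))  # (10, 15, 25)
```

With 958 training pairs and 25 parameters, the model has far more training pairs than free parameters.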

Performance Comparison

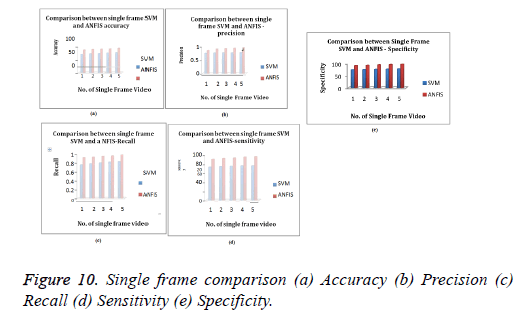

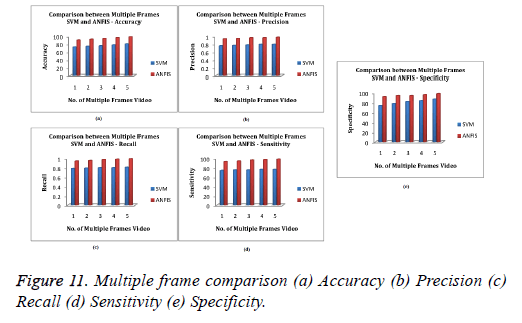

Here we compare the performance of the SVM and ANFIS classifiers for both single-frame and multiple-frame inputs. For each frame, we report accuracy, precision, recall, sensitivity and specificity (Tables 1 and 2 and Figures 10 and 11).

| Frame | Accuracy (%), SVM | Accuracy (%), ANFIS | Precision, SVM | Precision, ANFIS | Recall, SVM | Recall, ANFIS | Sensitivity (%), SVM | Sensitivity (%), ANFIS | Specificity (%), SVM | Specificity (%), ANFIS |
|---|---|---|---|---|---|---|---|---|---|---|
| Frame 1 | 70 | 87 | 0.75 | 0.86 | 0.75 | 0.91 | 73 | 90 | 73 | 91 |
| Frame 2 | 72 | 89 | 0.76 | 0.91 | 0.77 | 0.92 | 74 | 92 | 74 | 92 |
| Frame 3 | 73 | 90 | 0.77 | 0.93 | 0.79 | 0.94 | 75 | 93 | 75 | 94 |
| Frame 4 | 75 | 91 | 0.77 | 0.94 | 0.81 | 0.95 | 76 | 95 | 76 | 95 |
| Frame 5 | 77 | 94 | 0.78 | 0.96 | 0.82 | 0.97 | 76 | 96 | 77 | 96 |

Table 1. Comparison between single-frame SVM and ANFIS classifiers.

| Frame | Accuracy (%), SVM | Accuracy (%), ANFIS | Precision, SVM | Precision, ANFIS | Recall, SVM | Recall, ANFIS | Sensitivity (%), SVM | Sensitivity (%), ANFIS | Specificity (%), SVM | Specificity (%), ANFIS |
|---|---|---|---|---|---|---|---|---|---|---|
| Frame 1 | 72 | 90 | 0.75 | 0.93 | 0.78 | 0.94 | 74 | 93 | 74 | 92 |
| Frame 2 | 74 | 92 | 0.76 | 0.94 | 0.79 | 0.95 | 75 | 94 | 78 | 94 |
| Frame 3 | 75 | 94 | 0.77 | 0.96 | 0.8 | 0.97 | 75 | 96 | 82 | 94 |
| Frame 4 | 77 | 96 | 0.79 | 0.96 | 0.8 | 0.98 | 76 | 97 | 84 | 96 |
| Frame 5 | 80 | 98 | 0.79 | 0.97 | 0.81 | 0.99 | 76 | 98 | 87 | 98 |

Table 2. Comparison between multiple-frame SVM and ANFIS classifiers.

Thus, for detecting these pain expressions, the ANFIS classifier outperforms the SVM.
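For reference, the metrics reported in Tables 1 and 2 have standard definitions in terms of a binary confusion matrix, sketched below with pain as the positive class. Under these standard definitions recall and sensitivity are the same quantity, TP / (TP + FN), so the differing recall and sensitivity columns presumably rest on a definition or averaging scheme not described in the text. The helper name and the counts in the example are made up for illustration.

```python
def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    """Standard metrics for a pain / no-pain classifier, with pain as the positive class."""
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),       # a.k.a. sensitivity / true positive rate
        "sensitivity": tp / (tp + fn),  # same formula as recall
        "specificity": tn / (tn + fp),  # true negative rate
    }


# Made-up counts for illustration only.
m = binary_metrics(tp=45, fp=5, tn=40, fn=10)
print(m["accuracy"], m["precision"], m["recall"] == m["sensitivity"])  # 0.85 0.9 True
```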

Conclusion

This paper lays the groundwork for a novel pain expression classification method. In pain expression recognition, feature extraction plays an essential role in classification. In practice, feature selection depends to a great extent on the classifier and the classification problem. Illumination is another factor that affects face images. We therefore propose illumination-invariant features, using a feature descriptor based on weighted DWT and LBP, feature extraction based on AAM, and a classifier based on ANFIS. Using AAM-based features with the ANFIS classifier to classify pain versus no pain on the UNBC-McMaster dataset yields an area under the ROC curve (AUC) of 93.9% and a true positive hit rate of 92.4%. Experimental results on this database show that ANFIS classification is more effective than SVM classification for pain expression recognition. The results of this analysis indicate that an automatic pain assessment system based on facial expression analysis has promising clinical applications.

References

- Lucey P. Automatically detecting pain in video through facial action units. IEEE Trans Sys Man Cybern Part B 2011; 41: 664-674.

- Zhang J, Liu M, An L, Gao Y, Shen D. Alzheimer's disease diagnosis using landmark-based features from longitudinal structural MR images. IEEE J Biomed Health Inform 2017; 21: 1607-1616.

- Zhang K, Huang Y, Du Y, Wang L. Facial expression recognition based on deep evolutional spatial-temporal networks. IEEE Trans Image Proc 2017; 26: 4193-4203.

- Ma X, Song H, Qian X. Robust framework of single-frame face super resolution across head pose, facial expression, and illumination variations. IEEE Trans Human Mac Sys 2015; 45: 238-250.

- Starzyk JA, Graham J, Puzio L. Needs, pains, and motivations in autonomous agents. IEEE Trans Neur Netw Learn Sys 2017; 28: 2528-2540.

- Adjei T, Von Rosenberg W, Goverdovsky V, Powezka K, Jaffer U, Mandic DP. Pain prediction from ECG in vascular surgery. IEEE J Transl Eng Health Med 2017; 5: 1-10.

- Liu M, Shan S, Wang R, Chen X. Learning expressionlets via universal manifold model for dynamic facial expression recognition. IEEE Trans Image Proc 2016; 25: 5920-5932.

- Ding Y. Facial expression recognition from image sequence based on LBP and Taylor expansion. IEEE Access 2017; 5: 19409-19419.

- Ren J, Jiang X, Yuan J. A chi-squared-transformed subspace of LBP histogram for visual recognition. IEEE Trans Image Proc 2015; 24: 1893-1904.

- An L, Kafai M, Bhanu B. Dynamic Bayesian network for unconstrained face recognition in surveillance camera networks. IEEE J Emerg Select Top Circ Sys 2013; 3: 155-164.

- Khan RA, Meyer A, Konik H, Bouakaz S. Framework for reliable, real-time facial expression recognition for low resolution images. Patt Recogn Lett 2013; 34: 1159-1168.

- Werner P, Al-Hamadi A, Limbrecht-Ecklundt K, Walter S, Gruss S, Traue HC. Automatic pain assessment with facial activity descriptors. IEEE Trans Affect Comp 2017; 8: 286-299.

- Kim J. Discrete wavelet transform-based feature extraction of experimental voltage signal for Li-ion cell consistency. IEEE Trans Vehic Technol 2016; 65: 1150-1161.

- Murphy TM, Broussard R, Schultz R, Rakvic R, Ngo H. Face detection with a Viola-Jones based hybrid network. IET Biometrics 2017; 6: 200-210.

- Ding Y, Zhao Q, Li B, Yuan X. Facial expression recognition from image sequence based on LBP and Taylor expansion. IEEE Access 2017; 5: 19409-19419.

- Werghi N, Tortorici C, Berretti S, Del Bimbo A. Boosting 3D LBP-based face recognition by fusing shape and texture descriptors on the mesh. IEEE Trans Info Forens Secur 2016; 11: 964-979.

- Jung JY, Kim SW, Yoo CH, Park WJ, Ko SJ. LBP-ferns-based feature extraction for robust facial recognition. IEEE Trans Cons Electron 2016; 62: 446-453.

- Jin C. 3D fast automatic segmentation of kidney based on modified AAM and random forest. IEEE Trans Med Imag 2016; 35: 1395-1407.

- Lucey P. Automatically detecting pain in video through facial action units. IEEE Trans Sys Man Cybern Part B 2011; 41: 664-674.

- Lee HS, Kim D. Tensor-based AAM with continuous variation estimation: application to variation-robust face recognition. IEEE Trans Patt Anal Mac Intell 2009; 31: 1102-1116.

- Zhang Y, Chai T, Wang H, Chen X, Su CY. An improved estimation method for unmodeled dynamics based on ANFIS and its application to controller design. IEEE Trans Fuzzy Sys 2013; 21: 989-1005.

- Chong SS, Abdul Raman AAB, Harun SW, Arof H. Dye concentrations measurement using Mach-Zehnder interferometer sensor and modeled by ANFIS. IEEE Sens J 2016; 16: 8044-8050.

- Quan TM, Jeong WK. A fast discrete wavelet transform using hybrid parallelism on GPUs. IEEE Trans Parall Distr Sys 2016; 27: 3088-3100.

- Al-Hmouz A, Shen J, Al-Hmouz R, Yan J. Modelling and simulation of an Adaptive Neuro-Fuzzy Inference System (ANFIS) for mobile learning. IEEE Trans Learn Technol 2012.

- Sharma M. Artificial Neural Network Fuzzy Inference System (ANFIS) for brain tumor detection. arXiv 2012.