Research Article - Neurophysiology Research (2018), Special Issue 1-2018

Musical emotions in the brain-a neurophysiological study.

Patrícia Gomes, Telmo Pereira*, Jorge Conde

Department of Clinical Physiology, Coimbra Health School, Polytechnic Institute of Coimbra, Coimbra, Portugal

- *Corresponding Author:

- Telmo Pereira

Department of Clinical Physiology

Polytechnic Institute of Coimbra

Coimbra

Portugal

Tel: (+351) 965258956

E-mail: telmo@estescoimbra.pt

Accepted date: February 03, 2018

Citation: Gomes P, Pereira T, Conde J. Musical emotions in the brain-a neurophysiological study. Neurophysiol Res. 2018;1(1):12-20

Abstract

Introduction: Music has accompanied human evolution since the dawn of mankind. This project was designed to explore the connection between music and emotions in the brain. Objectives: The purpose of this analysis is to relate the emotions felt by the participants to their simultaneously recorded brain electrical activity. The article reports the activation of the EEG rhythm bands and draws conclusions about the brain areas most affected by music and the emotions reported by the participants. Methods: Thirty college students underwent EEG recording while listening to different musical excerpts, each associated with a different emotion: joy, sadness, fear and anger. The evoked emotions were evaluated through behavioural ratings, and the EEG was recorded with an electrode cap in 13 channels referenced to the mastoids. The recordings obtained during music listening were analysed and compared with the resting recordings. Results: Left hemisphere activation predominated with pleasant feelings and right hemisphere activation with unpleasant ones. The excerpt that conveyed a positive valence emotion (joy) showed alpha band asymmetry predominantly in the left central and parietal lobes, while the excerpts that induced unpleasant emotions (sadness and fear) were associated with increased coherent activity in the right fronto-temporal regions. In addition, emotions that provoke approach behaviour (joy and anger) were associated with left-sided brain areas, and emotions that cause withdrawal behaviour (sadness and fear) with the right hemisphere. Conclusion: This investigation showed, through EEG, the recruitment of several brain networks involved in the processing of music and of the emotions it evokes. 
We conclude that there is a noticeable relation between music and the emotions felt, with a strong influence on the patterns of cerebral activation.

Keywords

Electroencephalography, Music, Brain, Emotion

Introduction

The word "music" is of Greek origin and originally had a broader meaning than it has today. Techne (technique, art) musikê (of the Muses) encompassed the whole cultural education of art and soul; it was linked to the social life of the Greek people, to their festivals, religion and cultural manifestations, and in this way music reached a high degree of development. However, few "sound documents" from this period have survived; what remains are mainly texts attesting to the high level this art reached in Ancient Greece.

We often hear sentences such as "this song is sad", "this song makes me want to dance" or even "this melody made me shiver", which implicitly suggest that music provokes emotions and reactions in people. However, we cannot say that every song provokes the same reaction in every person.

The way sound waves are produced and perceived has an impact on the behaviour of the nervous system. Different cortical structures are involved in processing this information, such as the auditory, frontal and motor cortices [1]. Several frontal regions are known to be involved in music processing, such as the motor and premotor cortex in rhythm processing [2] and the middle frontal gyrus in the processing of musical mode and time [3]. More generally, the medial prefrontal cortex is strongly associated with emotional processing [4].

The human ability to create and enjoy music is a universal trait and plays an essential role in the daily life of most cultures. Music has a unique ability to activate memories, arouse emotions and intensify our social experiences. Several studies have sought to relate the mental states induced by music to its impact on the neurophysiological system. Levels of brain excitation thus create a bridge between mind and music, and are related to musical parameters such as pitch, rhythm and frequency.

In recent decades, advances in neuroscience have allowed a better understanding of the relations between music and the nervous system. Techniques such as magnetic resonance imaging (MRI) have enabled, for example, the detection of differences in the volume of specific brain structures, such as the corpus callosum, motor cortex and cerebellum, between trained musicians and individuals with no musical training [5], differences that have been discussed as possible neuroplastic effects of musical training. Studies with functional MRI have established correlations between certain brain areas and functions, musical abilities or sound processing. Robert Zatorre [6], a pioneer of neuroscience and music research, established, for example, the role of the right hemisphere in music processing, detailing how the auditory cortex processes auditory and musical information; this work focused on musical perception and production, that is, on the interaction between auditory and motor functions.

Studies on the neural basis of music have also used techniques such as electroencephalography (EEG) and the analysis of evoked potentials (EP) to understand the temporal aspects of musical information processing. EEG measures, with high temporal precision, the changes in brain electrical activity caused by specific tasks [7]. It is commonly used in experiments that investigate the auditory detection of dissonances, violated expectations in music and errors in melodies [8,9].

The analysis of EPs shows whether the brain detects dissonances more or less readily than consonances. This type of research raises questions about the biological component of melodic constructions. The analysis relies on potentials, or components, which are named according to their polarity (negative or positive) and the time window in which they appear. EEG has been widely used in studies that seek to understand the similarities and differences between musical and verbal processing. For example, Koelsch et al. have shown that the N400 component, which is typically related to the semantic processing of verbal language, is also elicited during the processing of nonverbal (musical) semantic information. This finding points to a close relationship between basic aspects of verbal and musical information processing.
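The naming convention described above can be illustrated with a minimal Python sketch, assuming epochs that are already time-locked to stimulus onset: trials are averaged into an ERP, and the most negative (or positive) deflection within a search window is labelled by its polarity and approximate latency, so that a negativity peaking near 400 ms reads as N400-like. The function name `erp_peak`, the sampling rate and the search window are illustrative assumptions, not parameters taken from the cited studies.

```python
import numpy as np


def erp_peak(epochs, fs, t0, window, polarity):
    """Average time-locked epochs and locate one ERP component.

    epochs   : array (n_trials, n_samples), time-locked to stimulus onset
    fs       : sampling rate in Hz
    t0       : time in seconds of the first sample relative to onset (e.g. -0.1)
    window   : (start, end) search window in seconds
    polarity : "N" for a negative peak, "P" for a positive peak
    Returns (label, latency_ms, amplitude).
    """
    if polarity not in ("N", "P"):
        raise ValueError("polarity must be 'N' or 'P'")
    # Averaging attenuates activity that is not time-locked to the stimulus.
    erp = np.asarray(epochs, dtype=float).mean(axis=0)
    times = t0 + np.arange(erp.size) / fs
    mask = (times >= window[0]) & (times <= window[1])
    segment, seg_times = erp[mask], times[mask]
    idx = np.argmin(segment) if polarity == "N" else np.argmax(segment)
    latency_ms = seg_times[idx] * 1000.0
    # Label by polarity and latency rounded to the nearest 10 ms, e.g. "N400".
    label = f"{polarity}{int(round(latency_ms, -1))}"
    return label, latency_ms, segment[idx]
```

In practice, component windows are usually fixed a priori from the literature rather than found by searching for the largest peak, since peak picking in noisy data tends to inflate amplitude estimates.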

Studies have been conducted with different types of music: music that evokes positive emotions, such as joy, and music that evokes negative emotions, such as sadness or nostalgia. The differences in the frequency of cerebral activation and in the anatomical areas recruited are marked, making it possible to differentiate the emotional effect of each piece. Each type of music also affects body rhythms (not only brain electrical activity but also respiratory rate, heart rate and so on), so selecting appropriate music is important for modulating the cortical electrical signal through different intensities and styles of music [10].

Another consistent finding across studies is the hemispheric lateralization of emotion-related functions, supported by a large body of neuroimaging and clinical studies that have made frontal [11-13] or global [14] lateralization a subject of discussion. Earlier studies have reported that an EEG index, frontal alpha asymmetry, is related to emotions and to approach/withdrawal motivational processes [15-17]. Altenmuller et al., comparing the EEG activity elicited during the emotional processing of complex auditory stimuli (emotional musical excerpts and environmental sounds), found a general bilateral fronto-temporal activation, a left temporal activation that increased with positive emotional music and a right fronto-temporal activation that increased with negative emotional music [18]. Indeed, music-related EEG studies have provided evidence that the right frontal region contributes preferentially to arousal and negatively valenced emotions, whereas the left frontal region contributes to positively valenced emotions [18,19]. Alpha asymmetry in temporal [20,21] and parietal [22,23] channels has also been reported to reflect emotional valence.
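A commonly used formulation of the alpha asymmetry index is ln(right alpha power) - ln(left alpha power) for a homologous electrode pair, for example F4 and F3 for frontal asymmetry. Because alpha power is generally taken to vary inversely with cortical activation, a positive index is read as relatively greater left-hemisphere activity. The sketch below assumes absolute alpha power in linear units (not dB); the function name is hypothetical, and the same index can be applied to temporal (e.g. T6/T5) or parietal (P4/P3) pairs.

```python
import numpy as np


def alpha_asymmetry(alpha_right, alpha_left):
    """Asymmetry index ln(right) - ln(left) for homologous electrodes.

    Inputs are absolute alpha power in linear units (e.g. uV^2), not dB.
    Because alpha power is usually read as inversely related to cortical
    activation, a positive value is interpreted as relatively greater
    left-hemisphere activity, and a negative value as relatively greater
    right-hemisphere activity.
    """
    right = np.asarray(alpha_right, dtype=float)
    left = np.asarray(alpha_left, dtype=float)
    if np.any(right <= 0) or np.any(left <= 0):
        raise ValueError("alpha power must be strictly positive")
    return np.log(right) - np.log(left)
```

Taking the difference of logarithms makes the index a log-ratio, so it is insensitive to overall scaling of power shared by both electrodes.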

The purpose of this analysis is to relate the emotions felt by the participants to their simultaneously recorded brain electrical activity. The article reports the activation of the EEG rhythm bands and draws conclusions about the brain areas most affected by each type of music and the emotions reported by the participants themselves.

Materials and Methods

This investigation evaluated the response of the brain's electrical activity to different musical excerpts in healthy university students with no training in music theory. The sample consisted of 30 young men and women aged between 18 and 25 years, all healthy and not taking any medication. All participants were informed about the project and read and signed an informed consent form before data collection.

Eight one-minute musical excerpts were chosen to induce the different emotions in the participants: joy (Serenade in G major, K. 525, Eine Kleine Nachtmusik, and Piano Sonata in A major, K. 331, both by Mozart), sadness (Adagio for Strings by Samuel Barber and Adagio for organ and strings in G minor by Albinoni), fear (Threnody to the Victims of Hiroshima by Krzysztof Penderecki and Moments of Terror by Manfredini) and anger (Mars, the Bringer of War by Gustav Holst and The Rite of Spring by Igor Stravinsky). All of these have been used in previous studies of this kind, so their differences in rhythm, frequency, pitch and musical tone have been thoroughly described [24].

A pre-study was performed to test the affective dimensions of each selected excerpt. For this, 35 volunteers were asked to rate each excerpt after hearing it. A questionnaire was used for the affective ratings, in which the participants chose, from among 26 adjectives, those that best described how they felt during the music and with what intensity. The adjectives were grouped as follows: Joy: happy, joyful, content, excited, fun, loving, proud; Sadness: discontented, heartbroken, depressed, sad, discouraged; Fear: fearful, scared, terrified, tense, nervous, anxious, worried; Anger: enraged, angry, furious, wrathful, raging, choleric. The excerpts were played in random order, with no intended emotion repeated twice in a row. Although the volunteers listened to all of the excerpts, only those that showed a clearly identified emotional quality at a high intensity were included in the experimental tasks. The excerpts chosen were Mozart's Piano Sonata No. 11 in A major, K. 331, third movement, for joy; Samuel Barber's Adagio for Strings for sadness; Gustav Holst's Mars, the Bringer of War for fear; and Igor Stravinsky's The Rite of Spring for anger.
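The ordering constraint of the pre-study (random order, with no intended emotion twice in a row) can be met with simple rejection sampling: shuffle, and reshuffle until no two adjacent excerpts share an emotion. The sketch below is a hypothetical illustration, not the procedure the authors used; the excerpt list follows the emotions assigned to the eight excerpts in the Materials and Methods, and `constrained_order` is an invented name.

```python
import random

# The eight pre-study excerpts and their intended emotions.
PRE_STUDY = [
    ("Mozart: Eine Kleine Nachtmusik, K. 525", "joy"),
    ("Mozart: Piano Sonata in A major, K. 331", "joy"),
    ("Barber: Adagio for Strings", "sadness"),
    ("Albinoni: Adagio for organ and strings in G minor", "sadness"),
    ("Penderecki: Threnody to the Victims of Hiroshima", "fear"),
    ("Manfredini: Moments of Terror", "fear"),
    ("Holst: Mars, the Bringer of War", "anger"),
    ("Stravinsky: The Rite of Spring", "anger"),
]


def constrained_order(excerpts, rng=None, max_tries=10_000):
    """Random order in which no intended emotion occurs twice in a row.

    Rejection sampling: reshuffle until the adjacency constraint holds.
    """
    rng = rng if rng is not None else random.Random()
    order = list(excerpts)
    for _ in range(max_tries):
        rng.shuffle(order)
        if all(a[1] != b[1] for a, b in zip(order, order[1:])):
            return order
    raise RuntimeError("no valid order found; the constraint may be unsatisfiable")
```

With four emotions of two excerpts each, roughly a third of all shuffles already satisfy the constraint, so rejection sampling terminates quickly; a constructive method would only matter for much tighter constraints.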

The research took place in the Labinsaúde laboratory, Coimbra Health School. The equipment comprised an adult nylon electrode cap system (CAP100C, BIOPAC® Systems, Inc., USA), shell electrodes for the mastoids (which served as reference), headsets, two computers and three monitors, two of them with a split signal. During data collection the laboratory was kept dimly lit and quiet to aid concentration and reduce signal contamination. Participants sat comfortably in a chair in front of the monitor and were identified by an arbitrary code to preserve anonymity. The examination met the minimum requirements set out in the guidelines of the American Clinical Neurophysiology Society.

Procedure

For EEG monitoring, 13 passive electrodes were positioned at F4-F3, C4-C3, P4-P3, F8-F7, T4-T3, T6-T5 and Cz, according to the international 10-20 system, and referenced to the mastoids. The experiment started once the participant was connected to the monitoring equipment and comfortably seated, with the room in the conditions described above.
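The 13-electrode montage can be written down as six right/left homologous pairs plus the midline vertex, which is the structure the hemispheric comparisons rely on. In 10-20 labels, even numbers denote right-hemisphere sites and odd numbers left-hemisphere sites, so homologues share the region letter and differ by one. The sketch below takes P3 as the left homologue of P4; the names `PAIRS`, `montage` and `is_homologous` are hypothetical. (In the modified combinatorial nomenclature, T3/T4 and T5/T6 correspond to T7/T8 and P7/P8.)

```python
# Six right/left homologous pairs plus the midline vertex.
# P3 is taken here as the 10-20 homologue of P4.
PAIRS = [("F4", "F3"), ("C4", "C3"), ("P4", "P3"),
         ("F8", "F7"), ("T4", "T3"), ("T6", "T5")]
MIDLINE = ["Cz"]


def montage(pairs=PAIRS, midline=MIDLINE):
    """Flatten the pairs and midline sites into a channel list, rejecting duplicates."""
    channels = [ch for pair in pairs for ch in pair] + list(midline)
    if len(set(channels)) != len(channels):
        raise ValueError("duplicate electrode in montage")
    return channels


def is_homologous(right, left):
    """10-20 convention: even numbers lie over the right hemisphere and odd
    numbers over the left; homologous sites share the region letter(s) and the
    right index is the left index plus one (e.g. F4/F3, T6/T5)."""
    r_region, r_num = right[:-1], int(right[-1])
    l_region, l_num = left[:-1], int(left[-1])
    return r_region == l_region and r_num % 2 == 0 and r_num == l_num + 1
```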

The presentation prepared for this study began with a brief text explaining to participants what they would have to do. While listening to the excerpts they kept their eyes closed, opening them afterwards to answer the questions shown on the slides. First, one minute of baseline EEG was recorded without any music; after a short pause, a slide with soft tonalities and no music was presented in order to "reset" the brain. The session then continued with a brief explanation of the participant's task and of how to answer the questions about the emotions felt and their intensity. The four excerpts representing the emotions with high intensity were stored as WAV files lasting approximately one minute. The experiment was built in SuperLab 4.5 (Cedrus® Corporation, USA), which managed all aspects of the presentation, with the excerpts played in random order and inter-stimulus intervals of 30 seconds. SuperLab also triggered the EEG recording at the onset of each excerpt through a StimTracker (Cedrus® Corporation, USA) connected to the MP150 platform (BIOPAC® Systems, Inc., USA), and the EEG was recorded continuously until the end of the excerpt. All data were compiled in a digital datasheet for subsequent statistical analysis.
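The session timeline can be sketched as a list of excerpt onsets, which is where a trigger would mark the EEG. This hypothetical sketch assumes that the first excerpt starts immediately after the one-minute baseline, that consecutive excerpts are separated only by the 30 s inter-stimulus interval, and that the instruction and reset slides and the rating periods can be ignored; the durations are those given in the Results for the four selected excerpts, and 256 Hz is the sampling rate stated in the EEG processing section.

```python
BASELINE_S = 60.0   # one minute of resting EEG recorded before the music
ISI_S = 30.0        # inter-stimulus interval between excerpts
FS = 256            # sampling rate (Hz) stated in the EEG processing section

# Excerpt durations (s) as reported in the Results for Music 1-4.
DURATIONS = {"joy": 55.0, "sadness": 56.0, "fear": 57.0, "anger": 47.0}


def schedule(order, durations=DURATIONS, baseline=BASELINE_S, isi=ISI_S):
    """Return (emotion, onset_s, offset_s) for each excerpt in presentation order.

    Simplification: the first excerpt starts right after the baseline, and the
    instruction/reset slides and rating periods are ignored.
    """
    events = []
    t = baseline
    for i, emotion in enumerate(order):
        if i > 0:
            t += isi
        onset = t
        t += durations[emotion]
        events.append((emotion, onset, t))
    return events


def to_sample(seconds, fs=FS):
    """Convert a time in seconds to the nearest sample index."""
    return int(round(seconds * fs))
```

A random presentation order could be drawn with `random.sample(list(DURATIONS), k=4)` before calling `schedule`; the resulting onsets, converted with `to_sample`, are the points from which each excerpt's EEG segment would be cut.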

After each excerpt, the participants rated it in terms of emotional quality (joy, sadness, fear or anger), emotional intensity (low, moderate or high) and personal liking (I like it a little, I like it moderately, I like it a lot). The answers were entered through a response pad, and the presentation software automatically saved the behavioural responses in .txt files. All stimuli were synchronized with the BIOPAC MP150 platform through a StimTracker (Cedrus® Corporation, USA), with continuous recording of the physiological variables during each excerpt.

EEG processing

The responses given by participants were automatically recorded and then assembled into a database. The EEG tracings were filtered with a 0.5 Hz high-pass filter and a 35 Hz low-pass filter, with a 50 Hz notch filter in place. Impedances were checked with an electrode impedance checker (EL-CHECK, BIOPAC® Systems, Inc., USA) and kept below 5 kΩ. An MP150 platform with EEG100C amplifiers running AcqKnowledge 4.4 software was used (BIOPAC® Systems, Inc., USA). The raw EEG data were imported as EDF files into EEGLAB v.13.6.5b, an open-source toolbox running under Matlab R2015a (The MathWorks Inc.). A sampling rate of 256 Hz was applied to the raw EEG data, which were band-pass filtered at 1-45 Hz and re-referenced to an average reference. Independent component analysis (ICA) was performed with the 'runica' algorithm using the default parameters implemented in EEGLAB. Independent components (ICs) that did not correspond to cortical sources, such as eye blinks, lateral eye movements, muscle activity or cardiac artifacts, were excluded from further analyses. The spectrum was then divided into the five typical frequency bands: delta (0.5-4 Hz), theta (4-8 Hz), alpha (8-13 Hz), beta (13-30 Hz) and gamma (30-45 Hz). Power spectra were computed and plotted with the spectopo function of the EEGLAB toolbox, which displays the power spectral density of the EEG data. The raw EEG power values (in dB) were log-transformed to normalize the distribution.
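The spectral steps can be approximated in Python with SciPy, as a rough analogue of the EEGLAB workflow rather than a reproduction of it: zero-phase band-pass filtering at 1-45 Hz, a 50 Hz notch, re-referencing to the common average, a Welch power spectral density estimate and integration over the five bands, expressed in dB. ICA-based artifact rejection is omitted, and the filter order, notch quality factor and 2 s Welch segments are assumptions. Band edges are treated as half-open intervals so that boundary frequencies such as 13 Hz are not counted twice.

```python
import numpy as np
from scipy.signal import butter, filtfilt, iirnotch, sosfiltfilt, welch

FS = 256  # sampling rate in Hz, as stated above

# Half-open bands [low, high) in Hz, following the band limits in the text.
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 45.0),
}


def preprocess(eeg, fs=FS):
    """Band-pass 1-45 Hz, notch 50 Hz, then common average reference.

    eeg: array (n_channels, n_samples). Filters are applied forward and
    backward (zero phase); ICA artifact removal is not included.
    """
    sos = butter(4, [1.0, 45.0], btype="bandpass", fs=fs, output="sos")
    x = sosfiltfilt(sos, eeg, axis=-1)
    b, a = iirnotch(50.0, Q=30.0, fs=fs)
    x = filtfilt(b, a, x, axis=-1)
    # Subtract the instantaneous mean across channels (average reference).
    return x - x.mean(axis=0, keepdims=True)


def band_power_db(eeg, fs=FS, bands=BANDS):
    """Absolute band power per channel in dB from a Welch PSD (2 s segments)."""
    freqs, psd = welch(eeg, fs=fs, nperseg=2 * fs, axis=-1)
    df = freqs[1] - freqs[0]
    out = {}
    for name, (low, high) in bands.items():
        mask = (freqs >= low) & (freqs < high)
        power = psd[..., mask].sum(axis=-1) * df  # rectangle-rule integral
        out[name] = 10.0 * np.log10(power)
    return out
```

Because the data are high-pass filtered at 1 Hz, the lowest part of the nominal delta band (0.5-1 Hz) is strongly attenuated in this sketch, as it would be in any pipeline combining those two settings.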

Statistical Analysis

Statistical analysis was performed with SPSS software, version 21 for Windows (IBM, USA). Descriptive analysis was used to characterize the study population and to evaluate the distribution of the continuous and categorical variables. Continuous variables are presented as mean ± standard deviation. Repeated-measures ANOVAs were conducted on the various measures considered. The factorial analysis included the factor Moment (two levels: baseline and music) and the factor EEG Channel (thirteen levels, corresponding to the EEG channels). The Greenhouse-Geisser correction was used when sphericity was violated, and the Bonferroni adjustment was adopted for the multiple comparisons used to locate the significant effects of a factor. The criterion for statistical significance was p ≤ 0.05, and p between 0.05 and 0.10 was taken as indicative of marginal effects.
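With 30 participants, a within-subject factor with k levels (or its interaction with the two-level Moment factor) has k - 1 numerator and (k - 1)(30 - 1) error degrees of freedom before any Greenhouse-Geisser correction, which gives the (12, 348), (3, 87) and (1, 29) values reported in the Results. Most of the reported η2 values are also consistent with partial eta squared, which can be recovered from F as F·df1 / (F·df1 + df2). The hypothetical helpers below encode these relations and the Bonferroni adjustment, and can serve as a quick consistency check on reported statistics.

```python
def rm_df(n_subjects, levels):
    """Uncorrected degrees of freedom for a within-subject effect with `levels`
    levels (or its interaction with a two-level factor): (k - 1, (k - 1)(n - 1))."""
    df1 = levels - 1
    return df1, df1 * (n_subjects - 1)


def partial_eta_squared(f, df1, df2):
    """Partial eta squared recovered from an F statistic: F*df1 / (F*df1 + df2)."""
    return f * df1 / (f * df1 + df2)


def bonferroni(p_values):
    """Bonferroni adjustment: multiply each p by the number of comparisons, cap at 1."""
    m = len(p_values)
    return [min(p * m, 1.0) for p in p_values]
```

For example, `partial_eta_squared(3.650, 12, 348)` rounds to 0.112, the value reported for Music 1. When the Greenhouse-Geisser correction is applied, both degrees of freedom are multiplied by the estimated epsilon, while the ratio used for partial eta squared is unchanged.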

Results

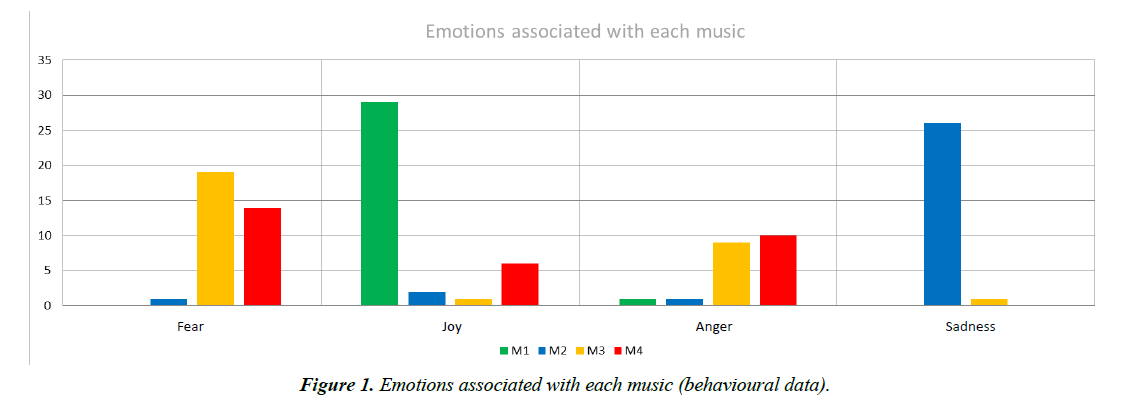

The analysis of the behavioural responses in the classification of the emotional quality and intensity of the presented songs allowed us to identify 4 songs as representative of 4 different emotional qualities with high levels of intensity, as reported by the participants in the pre-study: Music 1-Joy; Music 2-Sadness; Music 3-Fear; Music 4-Anger.

Music 1 is a 55-second excerpt from Mozart's Piano Sonata No. 11 in A major, K. 331, third movement. It was selected to induce joy because of its relatively fast tempo and dance-like rhythm, the absence of large leaps in melody and dynamics, and its major mode [24-26].

Music 2 is a 56-second excerpt from Samuel Barber's Adagio for Strings. It was selected to induce sadness because of its very slow tempo, minor harmonies and fairly constant melodic and dynamic range [24-26].

By contrast, Music 3, a 57-second excerpt from Gustav Holst's Mars, the Bringer of War, selected to induce fear, and Music 4, a 47-second excerpt from Igor Stravinsky's The Rite of Spring, selected to induce anger, both exhibit faster tempi with accelerations, consonant or dissonant chords, rapid changes in dynamics and strong melodic contrasts, many of them sudden and unexpected [24]. Although these characteristics are shared, the composers use them to different degrees and in different ways. Fundamentally, the fear excerpt presents clearly differentiated sections in which a slow, suspenseful passage is followed by a much faster one, with many sharp contrasts, whereas this is not observed in the anger excerpt [24] (Figure 1).

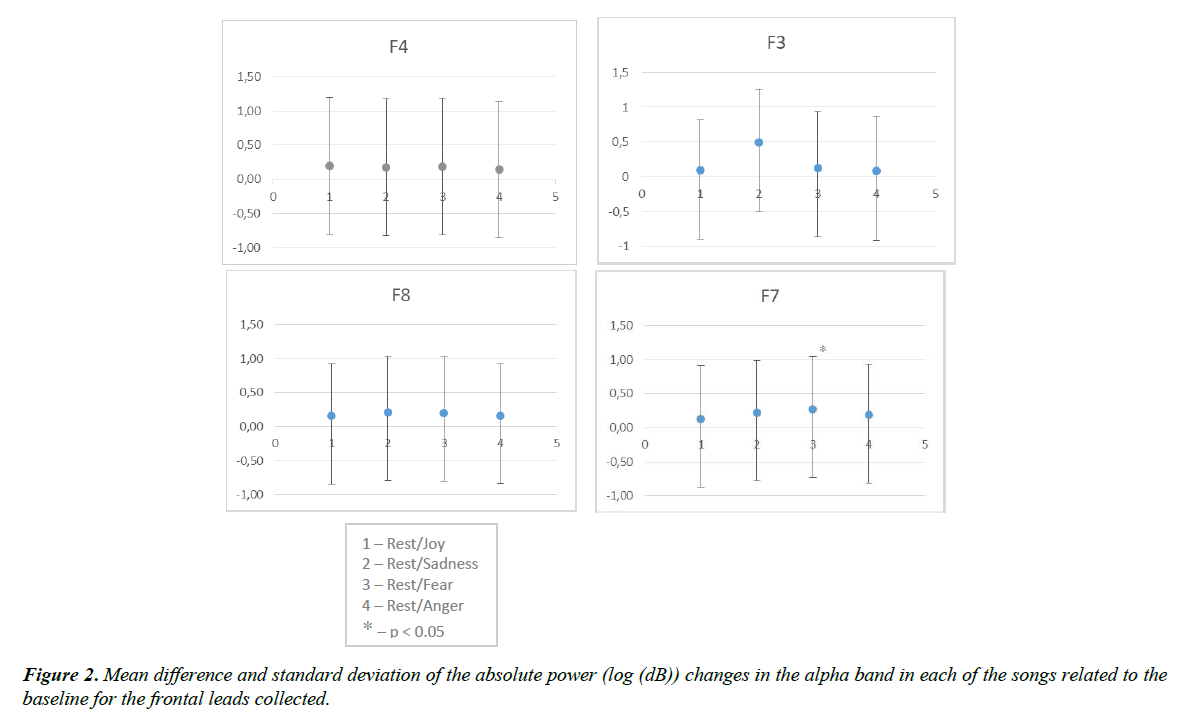

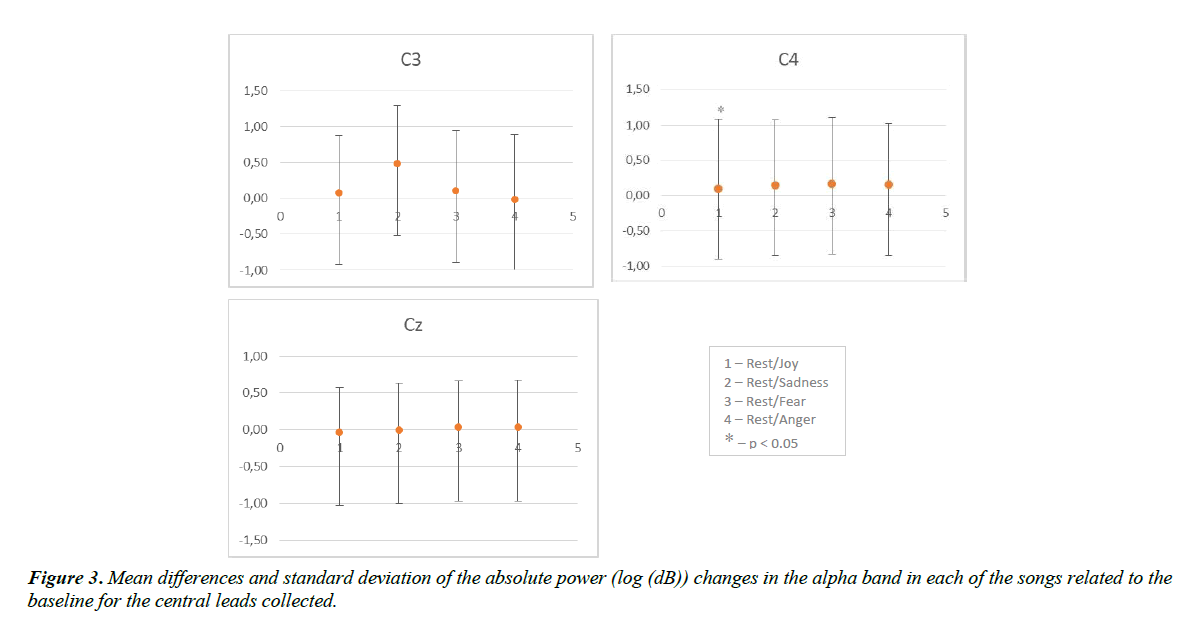

The alpha band absolute power obtained during exposure to music at the various cortical locations was compared with the alpha band power obtained at rest; Figures 2 and 3 show the difference between baseline and each excerpt. For Music 1 (Joy), no significant main effect of emotion was found, but the Channel-Emotion interaction was significant (F(12,348)=3.650; p<0.001; η2=0.112), with marginal differences located mainly in channels C4 (p=0.094) (Figure 3) and F7 (p=0.085) (Figure 2), reflecting an increase in alpha band power relative to resting values. A significant Lobe-Emotion interaction was also observed (F(3,87)=4.605; p<0.005; η2=0.137), driven mainly by a reduction of alpha band activity in the central lobe and an increase in the parietal lobe. The Hemisphere-Emotion interaction was also significant (F(1,29)=4.995; p=0.033; η2=0.147): alpha band power was modulated in both hemispheres, with the left hemisphere showing a reduction.
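The quantity shown in Figures 2 and 3, the change in alpha power from baseline to music, and its aggregation by hemisphere or lobe can be sketched as follows. This is a hypothetical illustration, not the SPSS analysis itself: it assumes per-channel alpha power already in dB, assigns hemisphere by the 10-20 odd/even rule and lobe by the leading letter of the electrode label (so F7/F8 count as frontal), and uses invented function names.

```python
LOBE_BY_LETTER = {"F": "frontal", "C": "central", "P": "parietal", "T": "temporal"}


def alpha_change(music_db, baseline_db):
    """Per-channel change in alpha power (dB): music minus baseline."""
    return {ch: music_db[ch] - baseline_db[ch] for ch in music_db}


def hemisphere(ch):
    """10-20 rule: odd index -> left, even index -> right, 'z' -> midline."""
    suffix = ch[1:]
    if suffix.lower() == "z":
        return "midline"
    return "left" if int(suffix) % 2 else "right"


def lobe(ch):
    """Lobe from the leading letter of the label (F7/F8 therefore count as frontal)."""
    return LOBE_BY_LETTER[ch[0].upper()]


def aggregate(change, key):
    """Mean change within groups defined by key(channel), e.g. hemisphere or lobe."""
    groups = {}
    for ch, value in change.items():
        groups.setdefault(key(ch), []).append(value)
    return {group: sum(vals) / len(vals) for group, vals in groups.items()}
```

Since the values are in dB, the difference is a log-ratio of music to baseline power; a positive change means alpha power rose during the excerpt.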

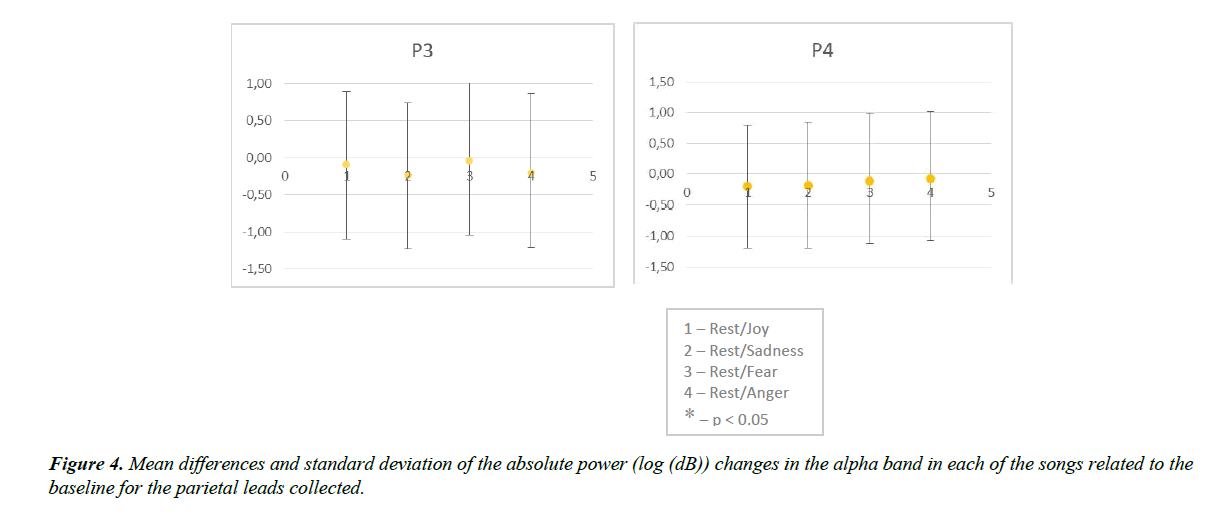

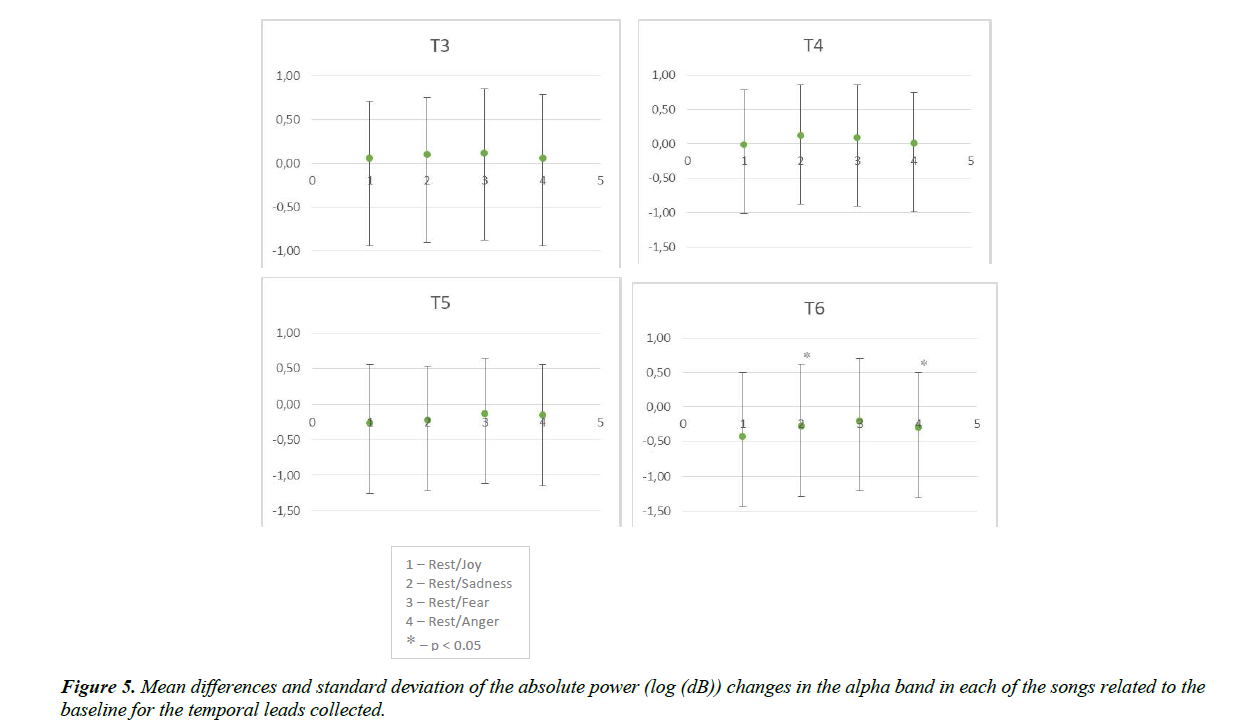

For Music 2 (Sadness), compared with the rest period, a significant Channel-Emotion interaction was observed (F(12,348)=0.487; p<0.001; η2=0.127), most pronounced in T6 (p=0.097), with a reduction of alpha band power in the parietal and temporal (T5 and T6) channels (Figures 4 and 5). The analysis of alpha band power aggregated by lobe identified a significant Lobe-Emotion interaction (F(3,87)=6.312; p<0.001; η2=0.179). The Hemisphere-Emotion interaction (F(1,29)=0.828; p<0.028; η2=0.142) showed an increase of alpha band activity in the right hemisphere and a reduction in the left.

For Music 3 (Fear), there was also a significant Channel-Emotion interaction relative to the resting pattern (F(12,348)=2.804; p=0.001; η2=0.088), mainly in frontal channel F7 (p=0.068) (Figure 2); alpha band activity increased in the central channels and decreased in the parietal and temporal channels T5 and T6. Greater activation of the right hemisphere, particularly at the level of the central lobe, was also identified (Lobe-Emotion interaction: F(3,87)=3.324; p<0.023; η2=0.103; Hemisphere factor: F(1,29)=4.655; p=0.039; η2=0.138).

The analysis of alpha band power during exposure to Music 4 (Anger) revealed a significant Channel-Emotion interaction (F(12,348)=2.491; p=0.004; η2=0.079), with a significant increase of alpha band power in temporal channel T6 (p=0.048) (Figure 5). The analysis of differences between brain lobes identified a significant Lobe-Emotion interaction (F(3,87)=2.742; p=0.048; η2=0.086), with an increase of alpha band activity in the central lobe. No significant effect was found between hemispheres, although alpha band activity increased in the left hemisphere.

Discussion

The aim of this study was to assess the influence of music listening on neurophysiological activation and on the emotions evoked. A large body of EEG studies on music perception has revealed a link between brain activation patterns and music-induced emotions. Moreover, many EEG approaches have identified distinct brain networks for positive and negative musical affective valence [27].

In most reviews, increased left frontal, temporal and parietal activation accompanies positive emotional music, suggesting some coherence between regions within the same hemisphere. In this study, the excerpt that conveyed a positive valence emotion (joy) showed alpha band asymmetry predominantly in the left central and parietal lobes, but with an overall tendency toward reduced cortical alpha activation compared with resting values. Left hemisphere activation with pleasant musical feelings is consistent with the reported activation of left fronto-temporal areas. Left hemisphere superiority has been established for the comprehension of temporal sounds [28], auditory sequences [29], interval regularities [30] and complex melodic strings [31].

In contrast, unpleasant emotions are associated with increased coherent activity in right-hemisphere regions. In most EEG studies, activation of right cortical regions was found only for unpleasant emotions [11,18,32,33]. In this study, both the excerpts chosen to induce sadness and fear revealed greater relative fronto-temporal activation in the right hemisphere; the difference between the two was the central lobe activation for the excerpt that conveyed fear. The excerpt chosen to induce anger revealed an increase of alpha band activity in the left central lobe, differing from the other two negative emotions. These findings agree with the literature considered, which consistently shows increased frontal activity in the right hemisphere associated with music that generates negative emotions [18,32,34,35].

The music that induced anger was associated with greater activation of the left hemisphere (central lobe). This finding is in accordance with the approach-withdrawal model of emotion processing, which states that emotions associated with approach behaviours are processed by left anterior brain regions and emotions associated with withdrawal behaviours by right anterior brain regions [35]. Anger is a negative emotion that elicits approach behaviour, and it has been found to correlate significantly with decreased left anterior cortical activity (which is associated with an increase in alpha activity) [36]. Harmon-Jones et al. [37] showed greater relative left midfrontal activity when students were placed in an anger-inducing situation, and Harmon-Jones and Sigelman [37] also showed significant shifts toward increased left prefrontal alpha activity following the induction of anger. Taken together, the studies conducted on the laterality of anger processing suggest that this model is important for understanding the behavioural and experimental data.

The prefrontal cortex is a large brain region that covers most of the frontal lobes. The left prefrontal cortex subserves positive emotional functions while listening to light, happy and joyful music, whereas the right prefrontal cortex subserves negative emotional functions during aversive music presentations [11,38,39].

This study has several limitations. The first is the small sample of only thirty participants, which makes these results preliminary and somewhat speculative until they are confirmed. The second is that familiarity with the excerpts was not measured. In addition, the design of this study did not allow several emotions to be present in one stimulus, although this is often experienced with music [40,41], and the emotions expressed in the excerpts may not be the same as those felt by the listener [42]. Some participants may therefore not have felt the intended emotion, since emotions in the context of music can be rather complex and involve moods, personality traits and situational factors [43]. Caution should also be exercised when generalizing these results because only four musical excerpts were used, one for each emotional category. Further research with more participants and more excerpts for each emotion is needed to provide stronger evidence of EEG markers of the emotional valence of music.

Our study attempts to delineate the neural substrates of music-induced emotions using a model with four emotional categories. The association between frontal alpha asymmetry and emotional valence was not significant, whereas the temporal asymmetry was, consistent with previous music-listening studies [44]. In addition, the predominance of left hemisphere activation with pleasant musical feelings and right hemisphere activation with unpleasant ones is consistent with findings relating right frontal activation to negative affect and left frontal activation to positive affect [12].

Our investigation also suggests that these emotions are organized according to valence and arousal. Regarding valence, it has repeatedly been suggested that the left hemisphere contributes to the processing of positive (approach) emotions, while right-hemisphere counterparts are involved in the processing of negative (withdrawal) affective states [11,45]. In line with this model, our results suggest an association between emotions that provoke approach behaviour (joy and anger) and left-sided brain areas, and between emotions that cause withdrawal behaviour (sadness and fear) and the right side of the brain. Regarding arousal, Heller [46] proposes that it is modulated by the right parietotemporal region, a region that our results also associated with music-evoked arousal.

Conclusion

The link between brain dynamics and music-induced emotion has been explored with various brain imaging modalities, including functional magnetic resonance imaging, electroencephalography (EEG) and positron emission tomography [7]. Music is a highly complex and precisely organized stimulus that requires different brain modules and systems involved in distinct cognitive tasks [27], and it is one of the most powerful elicitors of subjective emotion [44], but its ability to regulate emotions still needs further study. The desire to learn more, and better, continues to drive research in this area. One goal of clarifying how music affects the brain is to be able to use it as a treatment for conditions such as Parkinson's disease, Alzheimer's disease and even autism.

This article reinforces the association between alpha band activation in the left hemisphere and songs that trigger positive emotions, and between activation in the right hemisphere and songs that trigger negative emotions. It also supports the approach-withdrawal model of emotion processing, which states that emotions associated with approach behaviours are processed by left anterior brain regions and emotions associated with withdrawal behaviours by right anterior brain regions.

There have been major advances in understanding the role of music in the relationship between emotions and brain electrical activity, but we are still far from understanding the core of these mechanisms. Further investigation is therefore needed, both to use music to our advantage and to satisfy our curiosity. Future studies could compare musicians and non-musicians and record from a larger number of EEG channels, in order to validate the physiological function of the cerebral hemispheres and its correlation with musical perception. Likewise, neuroimaging techniques such as functional near-infrared spectroscopy (fNIRS), which document changes in blood oxygenation and blood volume related to human brain function, would provide additional information on this question.

Disclosure

No conflict of interest to declare.

Financing

The present study was not financed by any entity.

References

- Kristeva R, Chakarov V, Schulte-Monting J, et al. Activation of cortical areas in music execution and imagining: a high-resolution EEG study. NeuroImage. 2003;20(3):1872-83.

- Popescu M, Otsuka A, Ioannides AA. Dynamics of brain activity in motor and frontal cortical areas during music listening: a magnetoencephalographic study. NeuroImage. 2004;21(4):1622-38.

- Khalfa S, Schon D, Anton JL, et al. Brain regions involved in the recognition of happiness and sadness in music. Neuroreport. 2005;16(18):1981-84.

- Phan KL, Wager T, Taylor SF, et al. Functional neuroanatomy of emotion: a meta-analysis of emotion activation studies in PET and fMRI. NeuroImage. 2002;16(2):331-48.

- Zilles K, Schlaug G, Matelli M, et al. Mapping of human and macaque sensorimotor areas by integrating architectonic, transmitter receptor, MRI and PET data. J Anat. 1995;187:515-37.

- Penhune VB, Zatorre RJ, MacDonald JD, et al. Interhemispheric anatomical differences in human primary auditory cortex: probabilistic mapping and volume measurement from magnetic resonance scans. Cereb Cortex. 1996;6(5):661-72.

- Amodio DM, Bartholow BD. Event-related potential methods in social cognition. In: Cognitive Methods in Social Psychology. New York: Guilford Press; 2011.

- Bonnel AM, Faita F, Peretz I, et al. Divided attention between lyrics and tunes of operatic songs: Evidence for independent processing. Percept Psychophys. 2001;63(7):1201-13.

- Braun J, Kästner P, Flaxenberg P, et al. Comparison of the clinical efficacy and safety of subcutaneous versus oral administration of methotrexate in patients with active rheumatoid arthritis: Results of a six-month, multicenter, randomized, double-blind, controlled, phase IV trial. Arthritis Rheum. 2008;58(1):73-81.

- Luu P, Tucker DM, Makeig S. Frontal midline theta and the error-related negativity: Neurophysiological mechanisms of action regulation. Clin Neurophysiol. 2004;115(8):1821-35.

- Sutton SK, Davidson RJ. Prefrontal brain electrical asymmetry predicts the evaluation of affective stimuli. Neuropsychologia. 2000;38(13):1723-33.

- Davidson RJ. What does the prefrontal cortex “do” in affect: perspectives on frontal EEG asymmetry research. Biol Psychol. 2004;67(1-2):219-33.

- Craig AD. Forebrain emotional asymmetry: a neuroanatomical basis? Trends Cogn Sci. 2005;9(12):566-71.

- Hagemann D, Waldstein SR, Thayer JF. Central and autonomic nervous system integration in emotion. Brain Cogn. 2003;52(1):79-87.

- Allen JJ, Harmon-Jones E, Cavender JH. Manipulation of frontal EEG asymmetry through biofeedback alters self-reported emotional responses and facial EMG. Psychophysiology. 2001;38(4):685-93.

- Schmidt LA, Trainor LJ. Frontal brain electrical activity (EEG) distinguishes valence and intensity of musical emotions. Cogn Emot. 2001;15(4):487-500.

- Pizzagalli DA, Sherwood RJ, Henriques JB, et al. Frontal brain asymmetry and reward responsiveness: a source-localization study. Psychol Sci. 2005;16(10):805-13.

- Altenmüller E, Schürmann K, Lim VK, et al. Hits to the left, flops to the right: different emotions during listening to music are reflected in cortical lateralisation patterns. Neuropsychologia. 2002;40(13):2242-56.

- Mikutta C, Altorfer A, Strik W, et al. Emotions, arousal, and frontal alpha rhythm asymmetry during Beethoven’s 5th symphony. Brain Topogr. 2012;25(4):423-30.

- Park KS, Choi H, Lee KJ, et al. Emotion recognition based on the asymmetric left and right activation. Int J Med Med Sci. 2011;3(6):201-09.

- Lindquist KA, Wager TD, Kober H, et al. The brain basis of emotion: a meta-analytic review. Behav Brain Sci. 2012;35(3):121-43.

- Bruder GE, Tenke CE, Warner V, et al. Grandchildren at high and low risk for depression differ in EEG measures of regional brain asymmetry. Biol Psychiatry. 2007;62(11):1317-23.

- Stewart JL, Towers DN, Coan JA, et al. The oft-neglected role of parietal EEG asymmetry and risk for major depressive disorder. Psychophysiology. 2011;48(1):82-95.

- Arriaga P, Franco A, Campos P. Indução de emoções através de breves excertos musicais [Induction of emotions through brief musical excerpts]. Laboratório de Psicologia. 2010;8(1):3-20.

- Vink A. Living apart together: a relationship between music psychology and music therapy. Nord J Music Ther. 2001;10(2):144-58.

- Gomez P, Danuser B. Relationships between musical structure and psychophysiological measures of emotion. Emotion. 2007;7(2):377-87.

- Flores-Gutiérrez EO, Díaz FA, Barrios JL, et al. Metabolic and electric brain patterns during pleasant and unpleasant emotions induced by music masterpieces. Int J Psychophysiol. 2007;65(1):69-84.

- Ioannides AA, Popescu M, Otsuka A, et al. Magnetoencephalographic evidence of the interhemispheric asymmetry in echoic memory lifetime and its dependence on handedness and gender. NeuroImage. 2003;19(3):1061-75.

- Besson M, Schön D. Comparison between language and music. Ann NY Acad Sci. 2001;930(1):232-58.

- Samson RA, Visagie CM, Houbraken J, et al. Phylogeny, identification and nomenclature of the genus Aspergillus. Stud Mycol. 2014;78:141-173.

- Patel AD, Balaban E. Temporal patterns of human cortical activity reflect tone sequence structure. Nature. 2000;404(6773):80-84.

- Tsang CD, Trainor LJ, Santesso DL, et al. Frontal EEG responses as a function of affective musical features. Ann NY Acad Sci. 2001;930(1):439-42.

- Daly I, Malik A, Hwang F, et al. Neural correlates of emotional responses to music: An EEG study. Neurosci Lett. 2014;573:52-7.

- Trainor LJ, Schmidt LA. Processing emotions induced by music. In: The Cognitive Neuroscience of Music. Oxford University Press, New York. 2003.

- Demaree HA, Everhart DE, Youngstrom EA, et al. Brain lateralization of emotional processing: historical roots and a future incorporating "dominance". Behav Cogn Neurosci Rev. 2005;4(1):3-20.

- Buss AH, Perry M. The aggression questionnaire. J Pers Soc Psychol. 1992;63(3):452-59.

- Harmon-Jones E, Sigelman JD, Bohlig A, et al. Anger, coping, and frontal cortical activity: The effect of coping potential on anger-induced left-frontal activity. Cogn Emot. 2003;17(1):1-24.

- Eldar E, Ganor O, Admon R, et al. Feeling the real world: limbic response to music depends on related content. Cereb Cortex. 2007;17(12):2828-40.

- Hou JC. Research about the cognitive neuroscience of the “Mozart Effect”. Chin J Spec Educ. 2007;3:85-91.

- Hunter PG, Schellenberg EG, Schimmack U. Mixed affective responses to music with conflicting cues. Cogn Emot. 2008;22(2):327-52.

- Barrett FS, Grimm KJ, Robins RW, et al. Music-evoked nostalgia: affect, memory, and personality. Emotion. 2010;10(3):390-403.

- Davidson RJ, Scherer KR, et al. Handbook of affective sciences. Oxford University Press, New York. 2009.

- Vuoskoski JK, Thompson WF, McIlwain D, et al. Who enjoys listening to sad music and why? Music Perception. 2013;29(3):311-17.

- Baumgartner T, Esslen M, Jäncke L. From emotion perception to emotion experience: Emotions evoked by pictures and classical music. Int J Psychophysiol. 2006;60(1):34-43.

- Heller W. Neuropsychological mechanisms of individual differences in emotion, personality, and arousal. Neuropsychology. 1993;7(4):476-89.