Research Article - Neurophysiology Research (2018) Neurophysiology Research (Special Issue 1-2018)

Brain processing and coding responses to musical expertise: An EEG study.

Vitória Silva, Telmo Pereira*, Jorge Conde

Department of Clinical Physiology, Coimbra Health School, Polytechnic Institute of Coimbra, Coimbra, Portugal

- *Corresponding Author:

- Telmo Pereira

Department of Clinical Physiology

Polytechnic Institute of Coimbra Coimbra

Portugal

Tel: (+351) 965258956

E-mail: telmo@estescoimbra.pt

Accepted date: February 19, 2018

Citation: Silva V, Pereira T, Conde J. Brain processing and coding responses to musical expertise: An EEG study. Neurophysiol Res. 2018;1(1):23-31

Abstract

Introduction: The neuroscience of music is the scientific study of the brain-based mechanisms involved in the cognitive processes underlying music. Art is a product of human creativity, a superior skill that can be learned through study, practice and observation. The connection between brain and music is robust and bidirectional. Objectives: To understand the changes in cerebral activity produced by learning part of musical theory, the melodic intervals. Methods: Fifteen non-musicians without any academic theoretical musical knowledge, with a mean age of 20.40 ± 1.96 years, were selected to participate voluntarily in this observational and prospective study. Using the electroencephalogram (EEG) as the acquisition method, cerebral activity was analyzed at two different moments: baseline and after a 5-day training period. The EEG task consisted of recognizing and identifying the melodic intervals presented. Results: Behavioral responses showed an improvement in accuracy and reaction time at the second evaluation (49.83 ± 9.72% and 3.01 ± 1.53 seconds, respectively) compared with the first evaluation (40.67 ± 9.24% and 4.29 ± 1.98 seconds). When baseline and post-training responses were compared, EEG differences were found in the alpha, beta and gamma bands for absolute power and in the alpha band for relative power. The results showed a bilateral collaboration of the different regions of the brain and of both cerebral hemispheres in the physiological functions related to the learning process of music. Conclusions: Cerebral activation, activity and frequencies in non-musicians who have acquired some theoretical musical knowledge exhibit visible differences between evaluations. The human brain is very complex in the way it perceives the information it receives through sensory input. The two cerebral hemispheres perform distinct functions, yet in harmony.

Keywords

Electroencephalogram, Cerebral activation, Frequency-power, Music, Melodic intervals

Introduction

The brain, being the most complex and least understood organ of the human body, is a structure that is difficult to describe. However, it is possible to condense some information about its architecture and functioning by referring to distinguished researchers in neuroanatomy, neuroscience, neurophysiology, and neuropsychology.

Much of what we know or think about the brain rests on an assumption called the localization hypothesis: the brain is not just a mass of undifferentiated tissue, but rather, specific neural regions underlie distinctive behaviors, thoughts, and actions. This is reflected in the names we give to the brain regions, such as motor cortex (for movement), sensory cortex (for processing information from the five senses), auditory cortex, and visual cortex. Evidence for regional specialization comes from case studies of patients with lesions, and from various neuroimaging technologies (Positron Emission Tomography or PET, functional Magnetic Resonance Imaging or fMRI, Magnetoencephalography or MEG, Electroencephalography or EEG), as well as animal studies [1].

The electroencephalogram (EEG) is a recording of cerebral electrical potentials by electrodes on the scalp. Cerebral electrical activity includes action potentials that are brief and produce circumscribed electrical fields, and slower, more widespread, postsynaptic potentials. Thus the EEG is a spatiotemporal average of synchronous postsynaptic potentials arising in radially oriented pyramidal cells in cortical gyri over the cerebral convexity [2].

Alpha waves (8-13 Hz) generally are seen in all age groups but are most common in adults. They occur rhythmically on both sides of the head but are often slightly higher in amplitude on the non-dominant side, especially in right-handed individuals. A normal alpha variant is noted when a harmonic of alpha frequency occurs in the posterior head regions. They tend to be present posteriorly more than anteriorly and are especially prominent with closed eyes and with relaxation [3]. Alpha-band oscillations have two roles (inhibition and timing) that are closely linked to two fundamental functions of attention (suppression and selection), which enable controlled knowledge access and semantic orientation (the ability to be consciously oriented in time, space, and context). As such, alpha-band oscillations reflect one of the most basic cognitive processes and can also be shown to play a key role in the coalescence of brain activity in different frequencies [4].

Oscillatory neural activity in the frequency range of 14-30 Hz occurring at both pre- and postcentral cortical sites is known as the beta rhythm [5]. Drugs, such as barbiturates and benzodiazepines, augment beta waves [3]. In daily life, complex events are perceived in a causal manner, suggesting that the brain relies on predictive processes to model them. Within predictive coding theory, oscillatory beta-band activity has been linked to top-down predictive signals, sent from the higher abstract knowledge areas to motion perception areas via intermediate integration areas, while bottom-up prediction error signals flow in the opposite direction, following the principles of predictive coding [6].

Theta activity lies between about 4 and 7.5 Hz. The amount of EEG power in the theta and alpha frequency range is indeed related to cognitive and memory performance in particular, if a double dissociation between absolute and event-related changes in alpha and theta power is taken into account: small theta power but large alpha power (particularly in the frequency range of the upper alpha band) indicates good performance, whereas the opposite holds true for event-related changes, where a large increase in theta power (synchronization) but a large decrease in alpha power (desynchronization) reflect good cognitive and memory performance in particular [7].

Slow waves, or Delta waves, have a frequency of 3 Hz or less. They normally are seen in deep sleep in adults as well as in infants and children. Delta waves are abnormal in the awake adult. Often, they have the largest amplitude of all waves and can be focal (local pathology) or diffuse (generalized dysfunction) [3]. Since sleep is associated with memory consolidation, delta frequencies play a core role in the formation and internal arrangement of biographic memory as well as acquired skills and learned information [8].

Gamma frequency (>40 Hz) activity has been linked to a variety of perceptual and cognitive functions, such as feature binding and selective attention [9].

The meaning of music has of late been the subject of much confused argument and controversy. The controversy has stemmed largely from disagreements as to what music communicates, while the confusion has resulted for the most part from a lack of clarity as to the nature and definition of meaning itself [10]. Music consists of six fundamental attributes: pitch, duration, loudness, timbre, spatial location, and reverberant environment. A set of two or more pitches gives rise to contour and melody, and a set of two or more durations gives rise to rhythm. Thus melody and rhythm are secondary aspects of the fundamental attributes [1].

Similar to reading words, humans also learn to read music, and reading of music notation also requires audiovisual (AV) integration, in the form of written notes with musical sounds. However, compared to word reading, music reading offers a unique opportunity to study the mechanisms of learned AV integration, as identical AV stimuli can be presented to musicians, who have learned to integrate the stimuli, and nonmusicians, who have not [11].

Musical knowledge and skillfulness vary greatly across the population. This provides a basis for the study of how individual differences are reflected in brain activity during perceptive and cognitive processes. Not surprisingly, musical competence facilitates both sensory memory and conscious cognitive processing of musical sounds, reflected in enhanced brain activity [12].

Our motor and auditory systems are functionally connected during musical performance, and functional imaging suggests that the association is strong enough that passive music listening can engage the motor system [13]. The minds of the performer and the listener handle an extraordinary variety of domains, some sequentially and some simultaneously. These include domains of musical structure relating to pitch, time, timbre, gesture, rhythm, and meter. They also include domains that are not fundamentally domains of musical structure, such as affect and motion. Some aspects of these domains are available to consciousness and some are not [14].

Both brain hemispheres are needed for a complete music experience, while the frontal cortex has a significant role in rhythm and melody perception. The centers for perceiving pitch and certain aspects of melody, harmony and rhythm are identified in the right hemisphere. The left hemisphere is important for processing rapid changes in the frequency and intensity of a tune. Several brain imaging studies have reported activation of many other cortical areas besides the auditory cortex during listening to music, which can explain the impact of listening to music on emotions and on cognitive and motor processes [15].

Potes et al. identified stimulus-related modulations in the alpha (8-12 Hz) and high gamma (70-110 Hz) bands at neuroanatomical locations implicated in auditory processing. Specifically, they found stimulus-related electrocorticography (ECoG) modulations in the alpha band in areas adjacent to primary auditory cortex, which are known to receive afferent auditory projections from the thalamus. In contrast, they found stimulus-related ECoG modulations in the high gamma band not only in areas close to primary auditory cortex but also in other perisylvian areas known to be involved in higher-order auditory processing, and in superior premotor cortex [16].

There is increasing evidence across sensory systems, using a range of tasks, that predictive timing involves interactions between sensory and motor systems, and that these interactions are accomplished by oscillatory behavior of neuronal circuits across a number of frequency bands, particularly the delta (1-3 Hz) and beta (15-30 Hz) bands. In general, oscillatory behavior is thought to reflect communication between different brain regions and, in particular, influences of endogenous ‘top-down’ processes of attention and expectation on perception and response selection [17]. Additionally, beta rhythms appear to entrain to rhythmic sounds in auditory cortices and also exhibit similar patterns of suppression and enhancement for both listening and tapping over motor areas [13].

Psychophysical studies have shown that musicians have lower perceptual thresholds than non-musicians for frequency and temporal changes. These differences might be underpinned by functional and/or structural differences in the auditory neural circuitry. It is now well established that musical practice induces functional changes as reflected by cortical and sub-cortical electrophysiological responses to auditory stimuli [18].

With this study we intended to examine and compare cerebral activity in non-musicians before and after they learned one of the theoretical bases of music, the melodic intervals. EEG was used for this purpose at two different moments of assessment. Examinations were made on the first and fifth day and comprised a brief preparation assignment followed by a melodic interval recognition and identification task. Between the examination days, the participants completed an online home-training period of fifteen minutes per day.

We expected to find functional physiological differences in cerebral activity and activation when the EEG frequency bands were analyzed and both examinations were compared. Neural processes track musical progressions and respond to violations of melodic or harmonic expectations with similar latency as semantic violations in language. This suggests that we might process music as a hierarchically organized sequence of information, similar to language and motor programs [13]. We also aimed to identify the relation between the brain hemispheres, or a lateral dominance, in the process of music education. Since music is analogous to language without involving speech directly, it has generally been considered a right hemisphere skill. Musical skills, however, form a mixed set, some of which require left hemisphere integrity, and some of which require right hemisphere functioning [19]. David Jr.'s research concludes that the greatest possible human potential can only be realized by the use of both sides of the brain.

Methods

Study design and population

The present observational and prospective study aimed to evaluate cerebral activity before and after learning part of musical theory, the melodic intervals. Only healthy individuals naïve to music theory were included, with ages ranging from 18 to 25 years. Participants with former musical training, hearing problems, neurological or psychiatric disorders, or under pharmacological therapy were excluded. Fifteen people were selected to participate, constituting the study population, with a mean age of 20.40 ± 1.96 years.

This study was designed in conformity with the WMA Declaration of Helsinki - Ethical Principles for Medical Research Involving Human Subjects [20]. All data collected was treated with confidentiality and anonymity, and there were no conflicts of interest or financial counterparts. It has been guaranteed that the interest of the research is purely academic, and will have no commercial or profit-making interests.

EEG equipment

In both experimental sessions, the MP150 platform with EEG100C amplifiers, running through the AcqKnowledge 4.4 software, was used (BIOPAC® Systems, Inc. USA). EEG was recorded from 16 passive electrodes positioned at F3, C3, T3, P3, O1, Fpz, Fz, Cz, Pz, F4, C4, T4, P4, O2, T5 and T6, according to the international 10-20 system, mounted in an adult nylon electrode cap system (CAP100C, BIOPAC® Systems, Inc. USA). The minimum requirements for performing this examination followed the guidelines of the American Clinical Neurophysiology Society [21]. EEG signals were referenced to the earlobes and filtered using a 0.5 Hz high-pass filter, a 35 Hz low-pass filter, and a 50 Hz notch filter. Impedances were checked with an electrode impedance checker (EL-CHECK, BIOPAC® Systems, Inc. USA), and kept below 5 kΩ.

Stimuli

To structure the study, it was necessary to choose the melodic intervals to be used as stimuli. Four of the twelve existing types were arbitrarily defined as the easiest ones to teach and identify: the Major Second (M2), Major Third (M3), Perfect Fourth (P4) and Perfect Octave (P8) intervals.

Ten different clips of each of the referred intervals were carefully composed, resulting in forty experimental stimuli. The first step was playing and making an audio recording of each group. The program used for playing the tones was Everyone Piano (Copyright © 2010-2020), and the tones were recorded between octave 3 (C3-B3) and octave 5 (C5-B5) of the virtual piano. The sounds were then recorded once again using Microsoft Corporation Sound Recorder, version 10.1705.1302.0, to convert the files to WAV audio format and make the clips compatible with the experiment presentation software (SuperLab 4.5, Cedrus® Corporation, USA). This software was used to manage all aspects of stimulus presentation, participant responses (dedicated response pad), and the collection of behavioral responses. The sound clips were presented randomly.
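As an illustration of how the four selected intervals differ, the sketch below converts each interval into a pair of pitches. It assumes twelve-tone equal temperament with A4 = 440 Hz, ascending intervals, and a C4 root (MIDI note 60); none of these details is stated in the text, and the function names are hypothetical.

```python
from typing import Dict, Tuple

# Interval sizes in semitones (standard music theory definitions).
INTERVAL_SEMITONES: Dict[str, int] = {"M2": 2, "M3": 4, "P4": 5, "P8": 12}


def midi_to_hz(midi_note: int, a4_hz: float = 440.0) -> float:
    """Equal-tempered frequency of a MIDI note number (A4 is MIDI note 69)."""
    return a4_hz * 2.0 ** ((midi_note - 69) / 12.0)


def ascending_interval(root_midi: int, name: str) -> Tuple[float, float]:
    """Return (lower, upper) frequencies in Hz for an ascending interval on a root note."""
    upper_midi = root_midi + INTERVAL_SEMITONES[name]
    return midi_to_hz(root_midi), midi_to_hz(upper_midi)


# Assumed root for the demonstration: C4 (MIDI 60). The actual roots of the clips are not given.
for name in INTERVAL_SEMITONES:
    low, high = ascending_interval(60, name)
    print(f"{name}: {low:.2f} Hz -> {high:.2f} Hz (ratio {high / low:.4f})")
```

Under these assumptions the frequency ratio depends only on the number of semitones, so the same interval type sounds alike on any root, which is what makes ten different clips per interval possible.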

Procedure

The experimental procedure was performed at two different moments (baseline and after a training period of 5 days), always at approximately the same hour of the day for each participant. On the first day (baseline evaluation), all participants were informed about the objectives of the study and the overall procedure, and individual signed informed consent was obtained. A questionnaire was filled in individually to retrieve demographic and clinical information. The Portuguese version of the POMS (Profile of Mood States) questionnaire was also administered to compare emotional states between examinations [22]. The EEG equipment was then connected to the participants, who sat in a recliner in a dimly lit and quiet room. The head circumference was previously measured to ensure the adequacy of the EEG cap and the precision of the EEG lead positioning. A basal thirty-second EEG acquisition was made to verify the system operation and set the baseline guide for the entire EEG sampling. Then, a training session was initiated, containing a brief musical intervals tutorial that related each melodic interval to fragments of four popular songs, presented as a way of recognizing, internalizing and learning the tones of the melodic intervals prior to the specific test. No EEG was collected at this stage. After this training period, the experimental session began, and all stimuli were randomly presented in one single block. Each epoch included a 200 ms baseline period and a 10 s presentation window, in which EEG was recorded. After each epoch, the participant was asked to identify the presented interval; the responses were given on a dedicated response pad and automatically recorded by the SuperLab software. A 4 s inter-stimulus period was also programmed.

Starting on day one, the participants performed a 5-day training program for melodic interval recognition on an online platform (http://www.teoria.com/loc/pt/), according to a pre-defined and uniform structure. Each training session lasted 15 minutes and was performed individually at home. To ensure compliance with the training routine, each session was remotely monitored by one of the investigators through a web camera mounted on each participant's personal computer. On the day after the last day of the training period, the participants were again evaluated at the lab, and the overall procedure was repeated, following the same structure. All the data were compiled in a digital datasheet for subsequent statistical analysis.

All aspects of presentation were managed with the SuperLab 4.5 (Cedrus® Corporation, USA), which also triggered the recording of EEG data through a StimTracker (Cedrus® Corporation, USA) connected to the MP150 platform (BIOPAC® Systems, Inc., USA).

EEG data processing

Offline EEG data were processed with the EEGLAB v. 14.0.0b toolbox, running in MATLAB 8.5 (R2015a), using a custom program to carry out the EEG analysis. The EEG data were saved as EDF files, and then imported to the EEGLAB platform. Data were extracted and analyzed at a 256 Hz sampling rate. Forty epochs were extracted for each EEG file, from 200 ms before to 10 s after stimulus presentation onset. EEG was re-referenced offline to the average. ICA analysis was performed, allowing for the removal of non-brain-related components from the raw data, namely eye-related components, artifact components, muscle components, or others meeting the three criteria: (1) irregular occurrence throughout the session, (2) scalp location indicating facial muscles, and (3) presence of an abnormal spectrogram, such as extremely high-power low frequencies (eye blinks) or disproportionately large power from 20 to 30 Hz (muscle contamination) [23]. The Fpz channel was excluded from analysis due to poor scalp contact and a high occurrence of artifacts. After artifacts were removed, EEG signals were remixed from source space back into channel space for further analysis. Afterwards, the power of the EEG frequency bands during the 10 s window after stimulus onset was obtained using the spectopo function, and all values were exported to a Microsoft Excel worksheet for subsequent SPSS analysis. The raw EEG power values (in dB) were log-transformed to normalize the distribution. The frequency intervals designated for each band analysis were: 1-3 Hz for Delta, 4-7.5 Hz for Theta, 8-13 Hz for Alpha, 14-30 Hz for Beta and >30 Hz for Gamma.
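The epoching and band-power steps described above can be sketched in a few lines. This is not the authors' EEGLAB pipeline: it uses a plain periodogram computed with a naive DFT on a synthetic signal, whereas spectopo is understood to rely on Welch-type averaging. The function names, the treatment of frequencies that fall between the listed bands (left unassigned), and the demo signal are all assumptions made for illustration.

```python
import cmath
import math
from typing import Dict, List, Optional, Tuple

FS = 256  # sampling rate in Hz, as stated in the text


def epoch_bounds(onset: int, pre_s: float = 0.2, post_s: float = 10.0) -> Tuple[int, int]:
    """Half-open sample range [start, stop) spanning -pre_s to +post_s around a stimulus onset."""
    return onset - round(pre_s * FS), onset + round(post_s * FS)


def band_of(freq_hz: float) -> Optional[str]:
    """Map a frequency to the band limits listed in the text (gaps between bands stay unassigned)."""
    if 1.0 <= freq_hz <= 3.0:
        return "delta"
    if 4.0 <= freq_hz <= 7.5:
        return "theta"
    if 8.0 <= freq_hz <= 13.0:
        return "alpha"
    if 14.0 <= freq_hz <= 30.0:
        return "beta"
    if freq_hz > 30.0:
        return "gamma"
    return None


def band_powers(signal: List[float]) -> Dict[str, float]:
    """Sum periodogram power per band using a naive DFT (suitable for short demo signals only)."""
    n = len(signal)
    powers = {"delta": 0.0, "theta": 0.0, "alpha": 0.0, "beta": 0.0, "gamma": 0.0}
    for k in range(1, n // 2):
        band = band_of(k * FS / n)
        if band is None:
            continue
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(signal))
        powers[band] += abs(coeff) ** 2 / n
    return powers


def to_db(power: float) -> float:
    """Convert a strictly positive linear power value to decibels."""
    return 10.0 * math.log10(power)


# Synthetic 2 s signal: a pure 10 Hz sine, which falls inside the alpha band.
demo = [math.sin(2 * math.pi * 10 * i / FS) for i in range(2 * FS)]
powers = band_powers(demo)
print(epoch_bounds(onset=1000))
print(max(powers, key=powers.get))
```

At 256 Hz the 200 ms baseline rounds to 51 samples and the 10 s window spans 2560 samples, so each epoch in this sketch covers 2611 samples.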

Statistical analysis

The statistical analysis was performed with the SPSS software, version 18 for Windows (IBM, USA). A simple descriptive analysis was used to characterize the study population and to evaluate the distribution of the continuous variables. The continuous variables are represented as mean ± standard deviation. Repeated-measures ANOVAs were conducted on the various measures considered. Factorial analysis was performed taking the factor Moment (two levels: baseline and Post-training) and the factor EEG Channel (sixteen levels, corresponding to the EEG channels). The Greenhouse-Geisser correction was used when sphericity was violated, and the Bonferroni adjustment was adopted for multiple comparisons designed to locate the significant effects of a factor. The criterion for statistical significance was p ≤ 0.05, and a criterion of p between 0.1 and 0.05 was adopted as indicative of marginal effects.
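The decision rule and the multiple-comparison adjustment described above can be written out explicitly. The sketch below is a minimal illustration, not the SPSS procedure itself: it assumes that the marginal range is 0.05 < p ≤ 0.1 (the text does not say how the boundary values are handled) and applies the textbook Bonferroni adjustment, multiplying each p-value by the number of comparisons and capping the result at 1. The function names and the example p-values are hypothetical.

```python
from typing import List


def classify_p(p: float) -> str:
    """Label a p-value with the thresholds stated in the text (boundary handling is an assumption)."""
    if p <= 0.05:
        return "significant"
    if p <= 0.1:
        return "marginal"
    return "not significant"


def bonferroni(p_values: List[float]) -> List[float]:
    """Bonferroni adjustment: multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical unadjusted p-values from three pairwise comparisons.
raw = [0.01, 0.04, 0.5]
for p_raw, p_adj in zip(raw, bonferroni(raw)):
    print(f"raw p={p_raw:.3f} adjusted p={p_adj:.3f} -> {classify_p(p_adj)}")
```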

Results

Study population characteristics

The present study included fifteen participants, 10 of them female, with a mean age of 20.40 ± 1.96 years, all naïve to musical theory. Table 1 displays the overall characteristics of the study population. The mean body mass index (BMI) was 22.48 ± 3.82 kg/m². The participants were mostly right-handed, and none took any chronic medication other than the contraceptive pill (4 female participants). All participants had normal or corrected-to-normal vision, and no auditory problems were reported. In the POMS questionnaire analysis, a total score calculated from six dimensions (Tension, Depression, Hostility, Fatigue, Confusion and Vitality) of 101.13 ± 28.21 and 95.9 ± 26.9 was obtained for the first and second moments, respectively, showing no mood-state perturbations between examinations. For each dimension considered individually, similar outcomes were observed, without statistical significance.

Table 1. Characteristics of the study population included for analysis. BMI: body mass index.

| | Total (n=15) | Male (n=5) | Female (n=10) |
|---|---|---|---|
| Age, years | 20.40 ± 1.96 | 22.00 ± 2.00 | 19.60 ± 1.43 |
| BMI, kg/m² | 22.48 ± 3.82 | 21.80 ± 3.95 | 22.69 ± 4.15 |
| Dexterity | | | |
| Right-handed, n (%) | 14 (93.3) | 4 (80) | 10 (100) |
| Left-handed, n (%) | 1 (6.7) | 1 (20) | 0 (0) |
| Visual Problems | | | |
| Yes, n (%) | 8 (53.3) | 2 (40) | 6 (60) |
| No, n (%) | 7 (46.7) | 3 (60) | 4 (40) |
| Audition Problems | | | |
| Yes, n (%) | 0 (0) | 0 (0) | 0 (0) |
| No, n (%) | 15 (100) | 5 (100) | 10 (100) |
| Musical Training | | | |
| Yes, n (%) | 0 (0) | 0 (0) | 0 (0) |
| No, n (%) | 15 (100) | 5 (100) | 10 (100) |

Behavioral responses

The accuracy significantly improved from the baseline to the post-training evaluation, increasing from 40.67 ± 9.24% to 49.83 ± 9.72% (p<0.05). Also, the absolute number of correct answers increased significantly (mean of 16.27 ± 3.70 in baseline to 19.93 ± 3.89 after training; p<0.05) and the absolute number of errors conversely decreased from 23.73 ± 3.70 at baseline to 20.07 ± 3.89 after training (p<0.05). Furthermore, the time each participant took to judge the stimuli was reduced after the training period (4.29 ± 1.98 seconds at baseline versus 3.01 ± 1.53 seconds after training; p<0.05).
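The accuracy, correct-answer and error figures above are tied together by the number of stimuli per session. The short sketch below shows that arithmetic, assuming all forty stimuli (ten clips for each of the four intervals) were answered in each session; the function names are hypothetical.

```python
N_STIMULI = 40  # ten clips for each of the four intervals, as described in the Stimuli section


def accuracy_percent(n_correct: float, n_total: int = N_STIMULI) -> float:
    """Percentage of correctly identified intervals."""
    return 100.0 * n_correct / n_total


def n_errors(n_correct: float, n_total: int = N_STIMULI) -> float:
    """Number of incorrect answers, assuming every stimulus received a response."""
    return n_total - n_correct


# Mean numbers of correct answers reported in the text.
for label, mean_correct in (("baseline", 16.27), ("post-training", 19.93)):
    print(label, round(accuracy_percent(mean_correct), 2), round(n_errors(mean_correct), 2))
```

With four response options, guessing alone would be expected to yield about 25% accuracy, which gives a rough reference point for the reported values.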

Absolute power EEG frequencies analysis

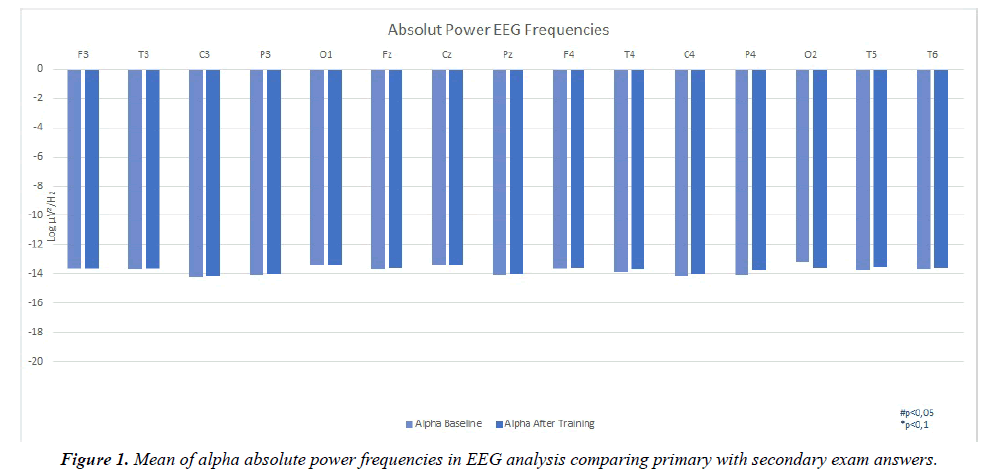

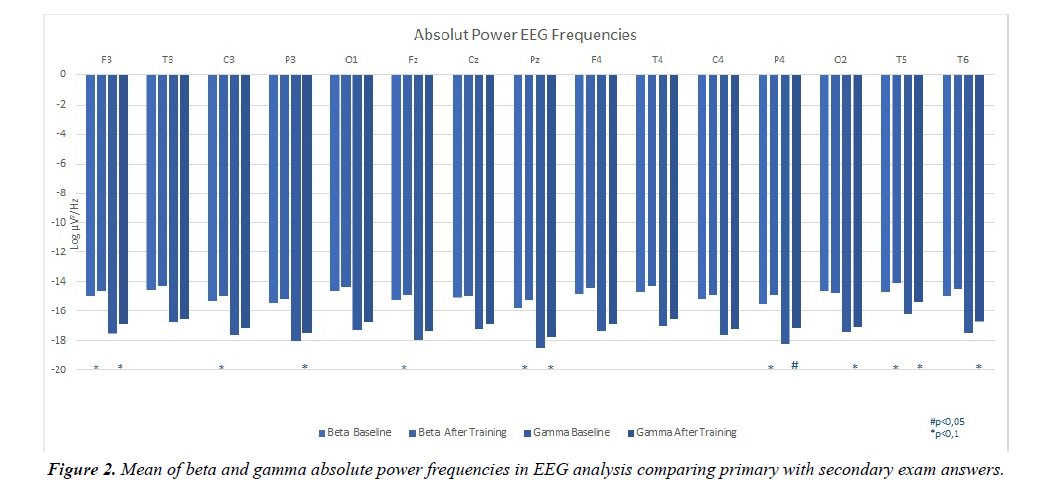

Figures 1 and 2 summarize the main findings in terms of the absolute power of the frequency bands analyzed within each channel in both evaluations: baseline and after training.

A global view of the results for the absolute values revealed a trend toward a differentiation between moments. Concerning the alpha band, a trend toward a reduction in alpha activation in the post-training evaluation, as compared with the baseline evaluation, was observed.

The absolute Beta band also revealed a marginally significant effect (F(1,14)=3.267; p=0.09; η²=0.190), with the decreases mainly localized on F3 (p=0.040), P4 (p=0.011), T5 (p=0.018) and Pz (p=0.030), and marginal differences also localized on C3 (p=0.099) and Fz (p=0.086).

Marginally significant effects were also found for the absolute Gamma power (F(1,14)=3.644; p=0.077; η²=0.207), with the differences located on F3 (p=0.042), T5 (p=0.028) and P4 (p=0.002); a trend for differences was also found in T6 (p=0.085), Pz (p=0.061) and P3 (p=0.064), all showing a decline in the after-training evaluation.

When comparing the baseline versus post-training patterns of cortical spectral Beta power for the epochs corresponding to the correct identification of the musical intervals, a marginally significant effect was found for the factor Moment (F(1, 14)=3.573; p=0.08; η²=0.203), as well as a significant interaction between the factors Moment and EEG channel (F(14, 196)=2.357; p=0.005; η²=0.144). The main differences were located on P4 (p=0.001), Pz (p=0.019) and T5 (p=0.002), with marginal differences also seen on F3 (p=0.057), C3 (p=0.080), Fz (p=0.091) and T6 (p=0.078). Considering the Gamma band, a marginally significant effect for the factor Moment was also found (F(1, 14)=3.63; p=0.077; η²=0.206), with differences located on P4 (p=0.001), F3 (p=0.05), P3 (p=0.039) and T5 (p=0.012), and marginal differences located on C3 (p=0.066) and Pz (p=0.075). All results showed a reduction of activation at the second moment of analysis. No further significant effects were found.

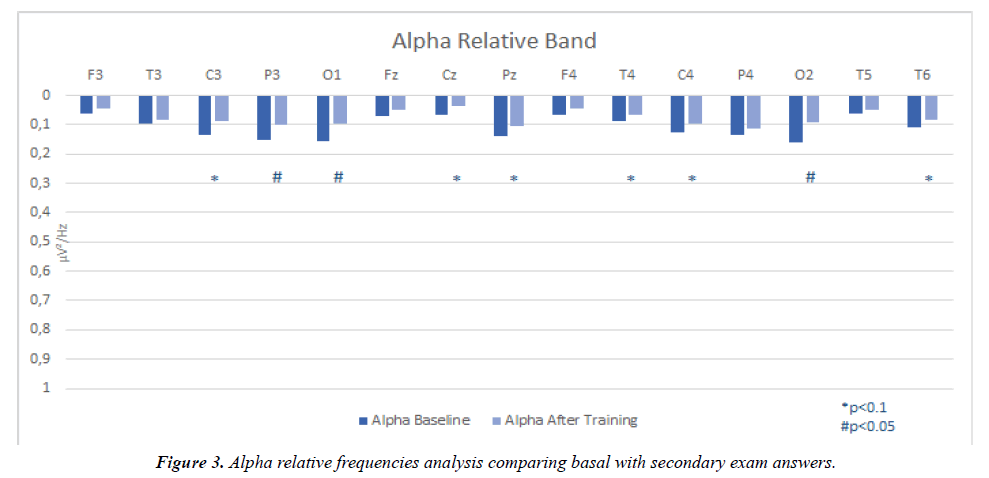

Relative EEG power frequencies analysis

The results concerning relative alpha band power depicted a clear differentiation in the pattern of cortical activation between sessions, with lower values observed in all channels post-training. A significant effect was obtained for the factor Moment (F(1,14)=8.292; p=0.012; η²=0.372), with a significant Moment*EEG channel interaction (F(14, 196)=2.343; p=0.005; η²=0.143). The effects were mainly located on C3 (p=0.014), Cz (p=0.035), P3 (p=0.002), O1 (p=0.007) and O2 (p=0.005), with marginal differences seen in T4 (p=0.085), C4 (p=0.066), T6 (p=0.082) and Pz (p=0.075). Similar results were obtained when considering the epochs corresponding to correct responses, with a significant effect for the factor Moment (F(1, 14)=7.911; p=0.014; η²=0.361) and a significant Moment*EEG channel interaction (F(14, 196)=2.695; p=0.001; η²=0.161). The main differences were located on P3 (p=0.008), C3 (p=0.034), O1 (p=0.002) and O2 (p=0.001), and marginally on T4 (p=0.060).

No other marginal or significant values were found in the statistical analysis of the EEG channels (Figure 3).
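The η² values reported alongside the F statistics are numerically consistent with partial eta squared computed from F and its degrees of freedom. The sketch below shows that relation; reading the reported η² as partial eta squared is an inference from the numbers, not something the text states, and the function name is hypothetical.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F statistics reported for relative alpha power: factor Moment, then the Moment*EEG channel interaction.
print(round(partial_eta_squared(8.292, 1, 14), 3))
print(round(partial_eta_squared(2.343, 14, 196), 3))
```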

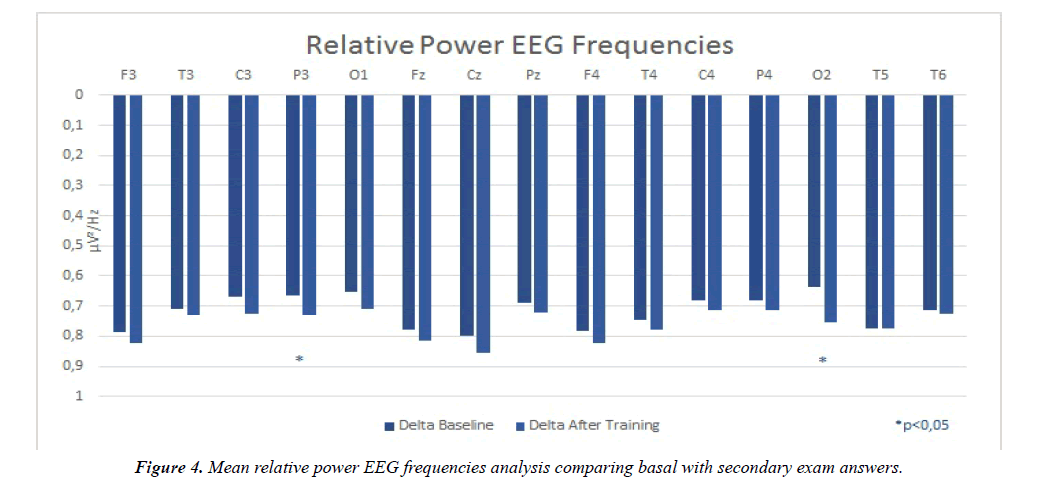

Figure 4 summarizes the main findings in terms of the relative power of the frequency bands analyzed within each channel in both evaluations. Significant effects were observed for the relative Delta power (F(14, 196)=1.897; p=0.029; η²=0.119), on P3 (p=0.034) and O2 (p=0.007). All the values reported showed an increasing tendency in the post-training evaluation. No other marginal or significant values were found in the statistical analysis of the EEG channels.

Discussion

The present observational and prospective study intended to evaluate cerebral activity before and after learning part of musical theory-the melodic intervals-as evidenced by changes in the EEG patterns. The main investigation prediction, that was to demonstrate that different patterns of functional connectivity and cerebral activation would be associated with the learning process, was supported by our results. Other feedback expected was to correlate the physiological functions of both cerebral hemispheres in the process of music learning.

Of all absolute frequencies analyzed (Delta, Theta, Alpha, Beta and Gamma), Alpha, Beta and Gamma were the ones that exhibited the most significate functional cerebral activation effects. Even though no statistically significance was observed regarding the Alpha absolute frequency band, a consistent and widespread trend towards an increase in alpha wave power was depicted on the post-training evaluation, as portrayed in Figure 1. Cortical fluctuations between 8-13 Hz (alpha frequency band) are perhaps the most studied brain oscillations since the early days of electrophysiological recordings. Studies of the primary sensory-motor cortices have suggested that alpha-band, oscillations decrease with attention and movement. There is also evidence that inhibitory alpha power precedes the involvement of cortical regions in task-related functions [24]. This increased potency of Alpha-band frequency after-training, can be explained by a lower number of neurons recruited, exhibiting a reduced cortical activation. On the other hand, it can also mean a higher recruitment efficiency of neurons concerning the cognitive resources, once the recognition and identification of the musical intervals became easier by home-training.

The difference between absolute and relative power can easily be explained when considering the fact that relative power measurements tend to give larger estimates for the dominant frequency range [7]. Relative alpha-frequency band outcomes (Figure 4) exposed a visible and marked reduction in the relative contribution of the alpha-band for the total power spectrum, contrasting with the increase depicted for the absolute values of the alpha power, meaning that a trade-off occurred with other frequency bands, for which an increase in their relative contribution for the total power superimposed the absolute alpha band trends. This pattern of lower cerebral activation at the second moment may thus reflect a re-modulation of neurons recruited for the assignment after the training sessions. From a functional perspective, there is substantial evidence that brain oscillations in the alpha band might reflect neural interactions between the thalamus and the cortex. This inflection of the cortex, reflected by augmentation or suppression of the power in the alpha band, might be the mechanism that facilitates transfer of information to task-related brain areas by inhibiting neural activity in task-unrelated areas [16]. Marginal effects were denoted at central regions of both cerebral hemispheres. Moreover, the occipital areas have also exposed significant effects bilaterally. This could mean a greater neuron oscillation and integration of this cerebral areas relating the task in evaluation, as well as the correlation between hemispheres. As described before, oscillations from alpha-band perform a fundamental role in brain general functional activity [4].
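The trade-off described above, where absolute alpha power rises while its relative contribution falls, can be made concrete with a small numerical sketch. All band values below are hypothetical linear powers in arbitrary units, chosen only to illustrate the arithmetic; they are not the study's data, and the function name is an assumption.

```python
from typing import Dict


def relative_power(band_powers: Dict[str, float]) -> Dict[str, float]:
    """Share of total power contributed by each band (inputs must be linear power, not dB)."""
    total = sum(band_powers.values())
    return {name: power / total for name, power in band_powers.items()}


# Hypothetical absolute band powers before and after training (arbitrary linear units).
baseline = {"delta": 10.0, "theta": 8.0, "alpha": 10.0, "beta": 8.0, "gamma": 4.0}
post_training = {"delta": 20.0, "theta": 12.0, "alpha": 11.0, "beta": 7.0, "gamma": 5.0}

rel_baseline = relative_power(baseline)
rel_post = relative_power(post_training)
print(f"absolute alpha: {baseline['alpha']} -> {post_training['alpha']}")
print(f"relative alpha: {rel_baseline['alpha']:.2f} -> {rel_post['alpha']:.2f}")
```

In this made-up case absolute alpha grows from 10 to 11 units, yet its share of the total drops from 0.25 to 0.20 because the other bands, here mainly delta, grow faster.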

In most people, the left side of the brain deals with logic, language, reasoning, number, linearity, and analysis. The right side of the brain deals with images and imagination, daydreaming, color, parallel processing, face recognition, pattern or map recognition, and the perception of music [19]. Other studies findings suggest that in the earlier stages of cortical neural processing, the right hemisphere is more active in musicians for detecting pitch changes in voice feedback. In the later stages, the left-hemisphere is more active during the processing of auditory feedback for vocal motor control and seems to involve specialized mechanisms that facilitate pitch processing [25]. The right temporal side of the participants brain revealed relevant alpha-frequency that could suggest that this side is further interrelated with music. However, research has shown that neither cerebral hemisphere is totally "dominant" for music, but rather that both hemispheres are involved [19].

Finally, the associations between correct answers showed, in the Moment and EEG*Moment analyses, statistically significant values in the P3 channel and, once again, in the occipital channels. The marginal values found for both factors and comparisons revealed a central, temporal, parietal and occipital distribution, in a nonlinear way, exhibiting a bilateral hemispheric correlation.

Analysis of absolute power in the beta band revealed an extensive group of marginal values across the brain when comparing both evaluations. Research by Fujioka et al. on beta-band oscillatory activity demonstrated that event-related desynchronization in the auditory cortex is related to hierarchical and internalized timing information and is widespread across the brain, including sensorimotor and premotor cortices, parietal lobe, and cerebellum, having an important role in neural processing of predictive timing [26]. The beta band desynchronizes in anticipation of an action, and when hearing and seeing that same action, followed by rebound synchronization after completion of the event [13]. When correct answers were compared, other marginal values were found within the Moment and EEG*Moment factors but, most importantly, statistical significance was found in the P4 and T5 channels, pointing to a relation between the right parietal and the contralateral left temporal lobes.
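The desynchronization and rebound synchronization mentioned above are often expressed as a percentage change in band power relative to a reference interval. The following is a minimal sketch of that widely used convention; it is not necessarily the computation used in this study, the function name `erd_percent` is an assumption, and the power values are hypothetical.

```python
def erd_percent(reference_power, event_power):
    """Percentage change in band power relative to a reference interval.

    Negative values indicate desynchronization (power decrease),
    positive values indicate synchronization (power increase).
    """
    if reference_power <= 0:
        raise ValueError("reference_power must be positive")
    return (event_power - reference_power) / reference_power * 100.0


# Hypothetical beta-band powers (arbitrary units), NOT study data.
reference = 8.0      # pre-event reference interval
during_event = 5.0   # power drops during anticipation of the event
after_event = 10.0   # power rebounds after the event is completed

erd = erd_percent(reference, during_event)    # -37.5, desynchronization
rebound = erd_percent(reference, after_event)  # 25.0, rebound synchronization
print(erd, rebound)
```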

There is a dissociation in the directionality of beta and gamma connectivity within the causal inference network, and a consistency of power modulations with a predictive coding-based model of causal aspects of actions. This means that focusing attention on a particular stimulus or on a particular modality increases the synchrony of responses in the neuronal networks that process the attended stimulus [6,27]. Gamma power showed another wide distribution across the brain, highlighting, once more, the parietal and temporal regions. This is in agreement with the beta-band values. It has been stated that the anticipation of a particular stimulus or motor act is associated with the generation of oscillatory activity in the beta and gamma bands in the cortical areas required for processing the stimulus or executing the task [27]. The right parietal lobe (P4) had an important role when participants' correct responses were associated, without diminishing the role of the left hemisphere, which showed significant and extended gamma-band activation. The primary neural source of the early gamma oscillations has been found in the auditory cortex, where modulations reflect an essential component of perceptual processing or a phase-locked sensory phenomenon, sensitive to different attention levels and important for preparing the brain for subsequent processing [9].

The delta band revealed significant values at the right occipital area (O2) in the general analysis and when incorrect answers from both exams were compared. Marginal results were found widespread across the scalp under the same conditions. Neuronal networks can display different states of synchrony, with oscillations at different frequencies. Frequencies associated with relaxed wakefulness are common all over the visual cortex and the corresponding thalamic nucleus; the sources of delta are typically localized in the thalamus and are stronger in the right hemisphere [28].

In light of the previous findings, it is possible to corroborate our predictions. There is indeed a differentiation and variation of functional connectivity in cerebral activation when an individual learns a new subject. The evidence is in agreement with previous research: a general pattern of increased EEG activity within each frequency band at the after-training moment (except for the relative delta band) suggests that a more refined selection of neurons was recruited for the participants' second assessment. Thus, repercussions were observed in higher processing hubs of the brain: the sensorimotor and premotor cortices, and the auditory cortex. Taken together with the shorter reaction time and the increased number of correct responses at the second EEG moment, these results highlight the importance of the virtual home training for the familiarization, recognition and naming of the melodic interval on display. The present results show a bilateral collaboration of the brain in physiological functions related to the learning process of music.

The present work is not without limitations, mainly the small sample size and the limited cortical electrophysiological coverage, as only 16 EEG channels were used. Comparing participants who are naïve regarding musical theory with participants with prior theoretical education in music would provide further evidence regarding the neural patterns inherent to musical harmony processing. Thus, for future studies, it would be interesting to compare musicians and non-musicians as a continuation of this research project. We also suggest using a larger number of EEG channels, aiming to validate the physiological functionality of the cerebral hemispheres and its correlation with musical expertise. Likewise, neuroimaging techniques such as fNIR, used to complement the EEG and document changes in blood oxygenation and blood volume related to human brain function, would provide additional insight into this question.

Conclusion

Art is a product of human creativity. It is a superior skill that can be learned by study, practice and observation [15]. With those provisos in mind, we conclude that cerebral activation, activity and frequencies in non-musicians who have acquired some theoretical musical knowledge exhibit visible differences between examinations. The human brain is very complex in the way it perceives information that it receives through sensory input. The two cerebral hemispheres perform different functions: the left hemisphere processes information that requires analysis or some type of language to comprehend, and the right hemisphere deals with the symbolic, non-verbal and emotional portion of what we call reality. The connection between brain and music is strong and bidirectional [15,19].

Disclosure

No conflict of interest to declare.

Financing

The present study was not financed by any entity.

References

- Levitin D. Neural correlates of musical behaviors: A brief overview. Music Therapy Perspectives. 2013;31(1):15-24.

- Binnie D, Prior P. Electroencephalography. J Neurol Neurosurg Psychiatry. 1994;57(11):1308-1319.

- Sucholeiki R, Selim B, Talavera F, et al. Normal EEG Waveforms. Medscape. 2017.

- Klimesch W. Alpha-band oscillations, attention, and controlled access to stored information. Trends Cogn Sci. 2012;16(12):606-617.

- Zhang Y, Chen Y, Bressler S, et al. Response preparation and inhibition: The role of the cortical sensorimotor beta rhythm. Neuroscience. 2008;156(1):238-246.

- Pelt S, Heil L, Kwisthout J, et al. Beta- and gamma-band activity reflect predictive coding in the processing of causal events. Soc Cogn Affect Neurosci. 2016;11(6):973-980.

- Klimesch W. EEG alpha and theta oscillations reflect cognitive and memory performance: a review and analysis. Brain Res Brain Res Rev. 1999;29(2-3):169-195.

- EEG (Electroencephalography): The Definitive Pocket Guide. iMotions. 2016.

- Christov M, Dushanova J. Functional correlates of the aging brain: Beta frequency band responses to age-related cortical changes. Int J Neurorehabilitation. 2016;3(1):98-109.

- Meyer L. Emotion and Meaning in Music. The University of Chicago Press. 1956.

- Nichols E, Grahn J. Neural correlates of audiovisual integration in music reading. Neuropsychologia. 2016;91:199-210.

- Pallesen K, Brattico E, Bailey C, et al. Cognitive control in auditory working memory is enhanced in musicians. PLoS ONE. 2010;5(6):e11120.

- Schalles M, Pineda J. Musical sequence learning and EEG correlates of audiomotor processing. Behav Neurol. 2015.

- Bharucha J, Curtis M, Paroo K. Varieties of musical experience. Cognition. 2006;100(1):131-172.

- Demarin V, Bedeković M, Puretić M, et al. Arts, brain and cognition. Psychiatria Danubina. 2016;28(4):343-348.

- Potes C, Brunner P, Gunduz A, et al. Spatial and temporal relationships of electrocorticographic alpha and gamma activity during auditory processing. Neuroimage. 2014;97:188-195.

- Merchant H, Grahn J, Trainor L, et al. Finding the beat: a neural perspective across humans and non-human primates. Philos Trans R Soc Lond B Biol Sci. 2015;370:20140093.

- François C, Jaillet F, Takerkart S, et al. Faster sound stream segmentation in musicians than in nonmusicians. PLoS ONE. 2014;9(7):e101340.

- David J. The Two Sides of Music. 1994.

- WMA - The World Medical Association-WMA Declaration of Helsinki – Ethical Principles for Medical Research Involving Human Subjects. Wmanet. 2013.

- Sinha S, Sullivan L, Sabau D, et al. American Clinical Neurophysiology Society Guideline 1: Minimum technical requirements for performing clinical electroencephalography. Neurodiagn J. 2016;56(4):235-244.

- Viana M, Almeida P, Santos R. Adaptação portuguesa da versão reduzida do Perfil de Estados de Humor – POMS. Análise Psicológica. 2001;19(1):77-92.

- Delamore A, Fernsler T, Serby H, et al. Swartz center for computational neuroscience. Sccnucsdedu. 2016.

- Rana K, Vaina L. Functional Roles of 10 Hz Alpha-Band Power Modulating Engagement and Disengagement of Cortical Networks in a Complex Visual Motion Task. PLoS ONE. 2014;9(10):e107715.

- Behroozmand R, Ibrahim N, Korzyukov O, et al. Left-hemisphere activation is associated with enhanced vocal pitch error detection in musicians with absolute pitch. Brain Cogn. 2014;84(1):97-108.

- Fujioka T, Ross B, Trainor L. Beta-Band Oscillations Represent Auditory Beat and Its Metrical Hierarchy in Perception and Imagery. J Neurosci. 2015;35(45):15187-15198.

- Singer W. Distributed processing and temporal codes in neuronal networks. Cogn Neurodyn. 2009;3(3):189-196.

- Pfurtscheller G, Lopes da Silva F. Event-related EEG/MEG synchronization and desynchronization: basic principles. Clin Neurophysiol. 1999;110(11):1842-1857.